K8S Ingress first experience: installing and using ingress-nginx

准备环境

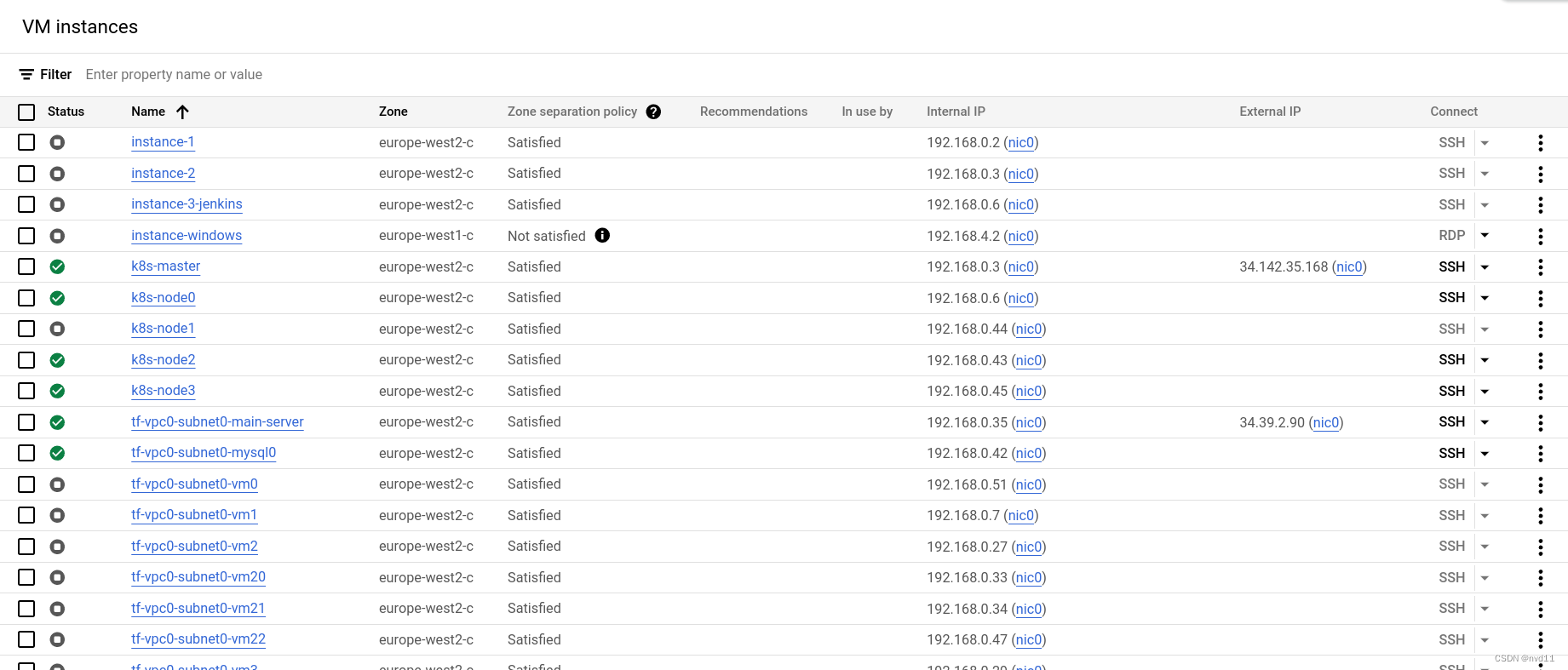

先把 google 的vm 跑起来…

    gateman@MoreFine-S500:~/projects/coding/k8s-s/service-case/cloud-user$ kubectl get nodes
    NAME         STATUS     ROLES                  AGE    VERSION
    k8s-master   Ready      control-plane,master   124d   v1.23.6
    k8s-node0    Ready      <none>                 124d   v1.23.6
    k8s-node1    NotReady   <none>                 124d   v1.23.6
    k8s-node3    Ready      <none>                 104d   v1.23.6

The environment is currently clean:

    gateman@MoreFine-S500:~/projects/coding/k8s-s/service-case/cloud-user$ kubectl get all -o wide
    NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE   SELECTOR
    service/kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   81d   <none>

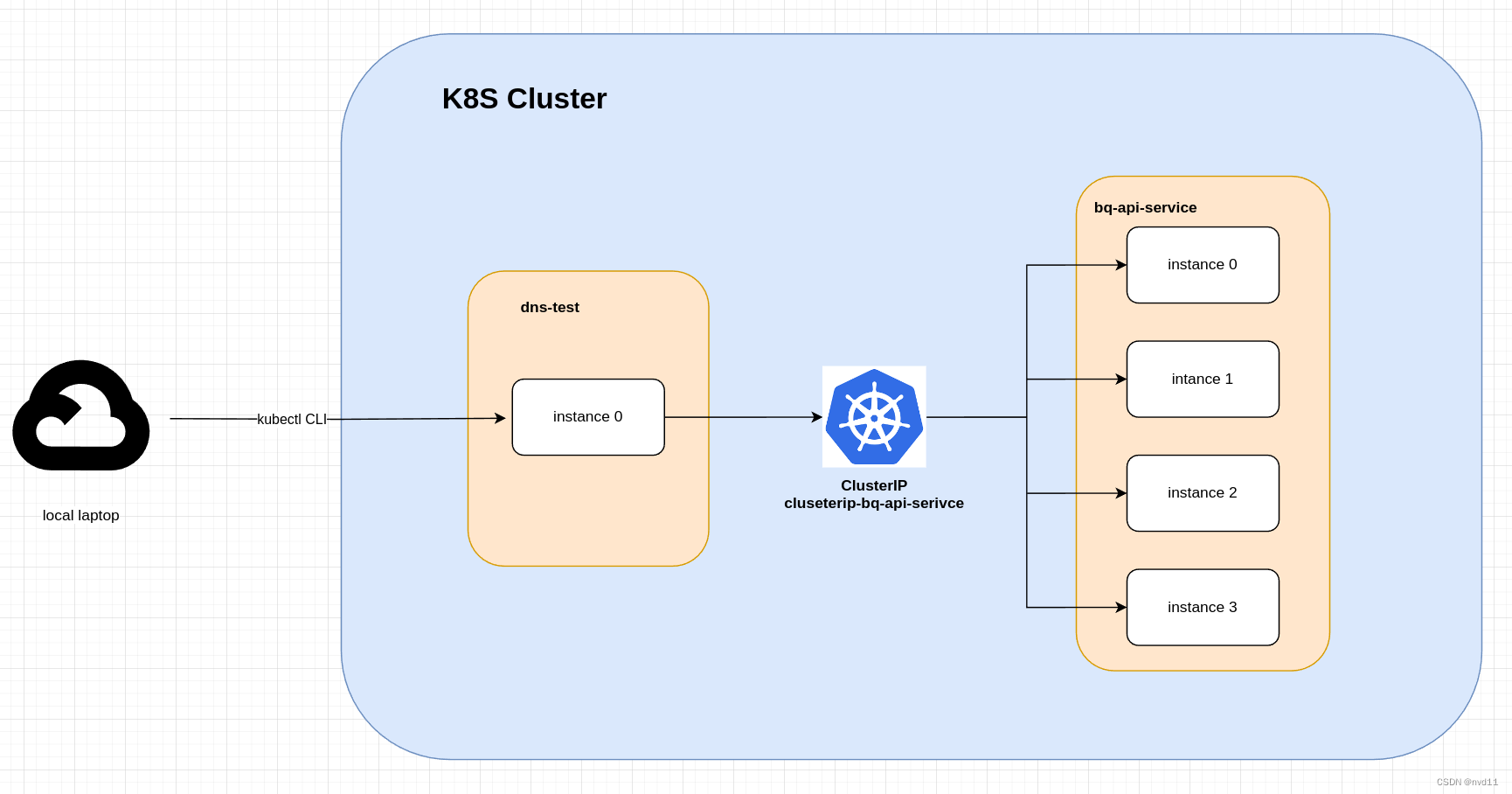

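Prepare one microservice and one ClusterIP Service

The overall layout is shown in the diagram above.

Components

Microservice

I first build a microservice, bq-api-service, and deploy it to the cluster with 4 Pods.

ClusterIP

I then create a ClusterIP Service, clusterip-bq-api-service, which acts as the load balancer and reverse proxy in front of that microservice.

Test Pod dns-test

Because there is no NodePort or Ingress yet, the only way to reach bq-api-service is from a test Pod inside the cluster, through the ClusterIP Service.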

Deploying bq-api-service

deployment-bq-api-service.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels: # labels of this Deployment
        app: bq-api-service # custom defined
        author: nvd11
      name: deployment-bq-api-service # name of this Deployment
      namespace: default
    spec:
      replicas: 4 # desired replica count; the replica Pods of a Deployment are typically distributed across multiple nodes
      revisionHistoryLimit: 10 # the number of old ReplicaSets to retain to allow rollback
      selector: # labels of the Pods that the Deployment manages; it is mandatory, without it we get this error:
        # error: error validating data: ValidationError(Deployment.spec.selector): missing required field "matchLabels" in io.k8s.apimachinery.pkg.apis.meta.v1.LabelSelector ..
        matchLabels:
          app: bq-api-service
      strategy: # update strategy
        type: RollingUpdate # RollingUpdate or Recreate
        rollingUpdate:
          maxSurge: 25% # the maximum number of Pods that can be created over the desired number of Pods during the update
          maxUnavailable: 25% # the maximum number of Pods that can be unavailable during the update
      template: # Pod template
        metadata:
          labels:
            app: bq-api-service # label of the Pods that the Deployment manages; it must match the selector, otherwise we get the error Invalid value: map[string]string{"app":"bq-api-xxx"}: `selector` does not match template `labels`
        spec:
          containers:
          - image: europe-west2-docker.pkg.dev/jason-hsbc/my-docker-repo/bq-api-service:1.1.7 # image of the container
            imagePullPolicy: IfNotPresent
            name: bq-api-service-container
            env: # set environment variables
            - name: APP_ENVIRONMENT
              value: prod
          restartPolicy: Always # restart policy for all containers within the Pod
          terminationGracePeriodSeconds: 10 # the period of time in seconds given to the Pod to terminate gracefully
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: clusterip-bq-api-service
    spec:
      selector:
        app: bq-api-service # selects the Pods that have the label app: bq-api-service
      ports:
      - protocol: TCP
        port: 8080
        targetPort: 8080
      type: ClusterIP

Run the command:

    gateman@MoreFine-S500:~/projects/coding/k8s-s/ingress/test$ kubectl apply -f bq-api-service.yml
    deployment.apps/deployment-bq-api-service created
    service/clusterip-bq-api-service unchanged
    gateman@MoreFine-S500:~/projects/coding/k8s-s/ingress/test$ kubectl get ep -o wide
    NAME                       ENDPOINTS                                                         AGE
    clusterip-bq-api-service   10.244.1.70:8080,10.244.1.71:8080,10.244.2.141:8080 + 1 more...   36s
    kubernetes                 192.168.0.3:6443                                                  83d
    gateman@MoreFine-S500:~/projects/coding/k8s-s/ingress/test$ kubectl get pods -o wide
    NAME                                         READY   STATUS    RESTARTS   AGE   IP             NODE        NOMINATED NODE   READINESS GATES
    deployment-bq-api-service-6f6ffc7866-2mn5n   1/1     Running   0          27s   10.244.2.141   k8s-node0   <none>           <none>
    deployment-bq-api-service-6f6ffc7866-74bcq   1/1     Running   0          27s   10.244.1.70    k8s-node1   <none>           <none>
    deployment-bq-api-service-6f6ffc7866-l2xzb   1/1     Running   0          27s   10.244.1.71    k8s-node1   <none>           <none>
    deployment-bq-api-service-6f6ffc7866-lwxt7   1/1     Running   0          27s   10.244.3.82    k8s-node3   <none>           <none>

Everything looks fine: the Service has four endpoints, one per Pod.

Testing the connection through the ClusterIP

First, create the dns-test Pod and open a shell in it:

    gateman@MoreFine-S500:~/projects/coding/k8s-s/ingress/test$ kubectl run dns-test --image=odise/busybox-curl --restart=Never -- /bin/sh -c "while true; do echo hello docker; sleep 1; done"
    pod/dns-test created
    gateman@MoreFine-S500:~/projects/coding/k8s-s/ingress/test$ kubectl exec -it dns-test -- /bin/sh
    / #

Call the ClusterIP Service:

    / # curl clusterip-bq-api-service:8080/actuator/info
    {"app":"Sales API","version":"1.1.7","hostname":"deployment-bq-api-service-6f6ffc7866-2mn5n","description":"This is a simple Spring Boot application to demonstrate the use of BigQuery in GCP."}
    / # curl clusterip-bq-api-service:8080/actuator/info
    {"app":"Sales API","version":"1.1.7","hostname":"deployment-bq-api-service-6f6ffc7866-l2xzb","description":"This is a simple Spring Boot application to demonstrate the use of BigQuery in GCP."}
    / #

Both reverse proxying and load balancing appear to work: the two responses come from different Pods.
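A quick way to confirm that the calls were spread over several Pods is to count the distinct hostname values in the responses. The sketch below does this for the two response bodies shown above; the helper name count_backends is made up for this illustration.

```python
import json
from collections import Counter

# The two response bodies returned by the curl calls above.
RESPONSES = [
    '{"app":"Sales API","version":"1.1.7",'
    '"hostname":"deployment-bq-api-service-6f6ffc7866-2mn5n",'
    '"description":"This is a simple Spring Boot application to demonstrate the use of BigQuery in GCP."}',
    '{"app":"Sales API","version":"1.1.7",'
    '"hostname":"deployment-bq-api-service-6f6ffc7866-l2xzb",'
    '"description":"This is a simple Spring Boot application to demonstrate the use of BigQuery in GCP."}',
]


def count_backends(bodies):
    """Return how many responses each Pod (identified by "hostname") served."""
    return Counter(json.loads(body)["hostname"] for body in bodies)


for pod, hits in count_backends(RESPONSES).items():
    print(pod, hits)
```

With more calls, the counts give a rough picture of how evenly the Service distributes requests.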

Installing Helm

Download:

https://github.com/helm/helm/releases

Then add it to PATH in ~/.bashrc:

    export PATH=$PATH:/home/gateman/devtools/helm

Check the version:

gateman@MoreFine-S500:~/projects/coding/k8s-s/ingress/test$ helm version

version.BuildInfo{Version:"v3.15.2", GitCommit:"1a500d5625419a524fdae4b33de351cc4f58ec35", GitTreeState:"clean", GoVersion:"go1.22.4"}

Installing the ingress-nginx controller with Helm

Add the ingress-nginx repo to Helm:

    helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

List the repos:

    gateman@MoreFine-S500:~/projects/coding/k8s-s/ingress/test$ helm repo list
    NAME            URL
    ingress-nginx   https://kubernetes.github.io/ingress-nginx

Downloading the ingress-nginx package with Helm

helm pull

    helm pull ingress-nginx/ingress-nginx

This downloads a tgz file, ingress-nginx-4.10.1.tgz.

Extract it (for example with tar -xzf ingress-nginx-4.10.1.tgz):

    gateman@MoreFine-S500:~/projects/coding/k8s-s/ingress/ingress-nginx/helm/ingress-nginx$ ls -l
    total 132
    drwxrwxr-x 2 gateman gateman  4096 6月 25 01:26 changelog
    -rw-r--r-- 1 gateman gateman   752 4月 26 22:02 Chart.yaml
    drwxrwxr-x 2 gateman gateman  4096 6月 25 01:26 ci
    -rw-r--r-- 1 gateman gateman   177 4月 26 22:02 OWNERS
    -rw-r--r-- 1 gateman gateman 49134 4月 26 22:02 README.md
    -rw-r--r-- 1 gateman gateman 11358 4月 26 22:02 README.md.gotmpl
    drwxrwxr-x 3 gateman gateman  4096 6月 25 01:26 templates
    drwxrwxr-x 2 gateman gateman  4096 6月 25 01:26 tests
    -rw-r--r-- 1 gateman gateman 45136 6月 25 02:02 values.yaml

Of these, values.yaml is the most important configuration file.

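Modifying values.yaml

Some tutorials tell you to point the image repository at an Aliyun mirror for faster pulls. My servers run on Google Cloud, so I skip that step.

- controller.kind: change Deployment to DaemonSet

  The advantages are:

  a. One ingress-nginx Pod runs on every eligible node, so each of those nodes can serve as an entry point. (In practice we will use a label so that ingress-nginx is deployed on only one node.)

  b. When a matching node joins or comes back after a failure, the DaemonSet starts a Pod on it automatically, which keeps the entry point available.

- controller.hostNetwork: change false to true

  hostNetwork is not the default for a DaemonSet; it has to be enabled explicitly. With it, each Pod shares the node's network stack, so nginx listens directly on the node's ports 80 and 443, which simplifies the network setup.

  The trade-off is weaker isolation and security: the Pod sees the node's network interfaces, and its ports can clash with other processes on the node.

- controller.dnsPolicy: change ClusterFirst to ClusterFirstWithHostNet

  ClusterFirst, the default policy, sends lookups to the cluster DNS service; names outside the cluster domain are forwarded by that DNS server to its upstream resolver.

  Once hostNetwork is enabled, however, the Pod lives in the node's network namespace, and a Pod with hostNetwork: true and dnsPolicy: ClusterFirst falls back to the behaviour of the Default policy: it uses the node's resolver and can no longer resolve Service names.

  ClusterFirstWithHostNet restores cluster DNS for hostNetwork Pods. It is not a "cluster DNS first, host DNS as fallback" mechanism: there is no automatic failover to the node's resolver when the cluster DNS is down.

  For a hostNetwork DaemonSet such as this controller, ClusterFirstWithHostNet is therefore the setting that keeps in-cluster Service names resolvable.

- controller.service.type: change LoadBalancer to ClusterIP

  LoadBalancer asks the underlying infrastructure (for example a cloud platform) to provision an external load balancer and public IP for the controller, which may incur extra network cost.

  In this setup the controller is reached through the node's own ports (hostNetwork), so a ClusterIP Service is enough. Other options for internal access, such as NodePort or external IPs, can be configured later if needed.

  The change itself is a one-line edit of controller.service.type in values.yaml.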
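The interaction between hostNetwork and dnsPolicy is easy to get wrong, so here is a minimal sketch of it as a lookup function. It is a simplified model of the behaviour described in the Kubernetes documentation, not code taken from Kubernetes; the function name effective_dns and the return values "cluster" and "node" are chosen for this illustration, and the None policy is not modelled.

```python
def effective_dns(host_network: bool, dns_policy: str) -> str:
    """Return which resolver a Pod ends up using: "cluster" or "node".

    Simplified model; the "None" policy (custom dnsConfig) is not covered.
    """
    if dns_policy == "Default":
        # The Pod inherits the name resolution configuration of its node.
        return "node"
    if dns_policy == "ClusterFirstWithHostNet":
        # Explicitly keeps cluster DNS for Pods that use the host network.
        return "cluster"
    if dns_policy == "ClusterFirst":
        # On a hostNetwork Pod, ClusterFirst behaves like Default.
        return "node" if host_network else "cluster"
    raise ValueError(f"policy not modelled: {dns_policy}")


# The controller Pod in this article: hostNetwork=true.
print(effective_dns(True, "ClusterFirst"))             # node resolver
print(effective_dns(True, "ClusterFirstWithHostNet"))  # cluster DNS
```

This is why the dnsPolicy change goes hand in hand with hostNetwork: without it, the controller Pod would resolve names through the node's resolver only.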

Before deploying the ingress-nginx controller (which we have turned into a DaemonSet), create a dedicated namespace for it:

    kubectl create ns ingress-nginx

The ingress-nginx controller will be deployed into this namespace of the same name.

This raises a question: can an Ingress controller in namespace A serve Ingress resources in namespace B?

The answer is yes:

1. The Ingress controller is usually deployed in its own namespace, for example ingress-nginx.
2. Ingress resources can be defined in any namespace.
3. Kubernetes does not move or redirect anything between namespaces. The controller watches the API server and, by default, sees the Ingress resources of every namespace.
4. The controller then serves the Ingress resources whose ingress class matches its own, whichever namespace they live in.

Labelling the target node

As a gateway-like component, the ingress controller in theory only needs to run on one node.

Because we changed the controller kind to DaemonSet in values.yaml, every node would normally get its own Pod, which wastes resources.

In practice, values.yaml also defines a node selector, so that only nodes carrying the label ingress=true receive a Pod.

The node selector is defined under controller.nodeSelector:

    controller:
      nodeSelector: # nodeSelector of the DaemonSet we switched to
        kubernetes.io/os: linux
        ingress: "true" # use a string instead of a bool

So we only need to add this label to one node.

Labelling only the master node does not work, however: the master node carries a taint, and Pods of Deployments or DaemonSets that do not tolerate it are not scheduled there.

This time we label only k8s-node0:

    gateman@MoreFine-S500:~/projects/coding/k8s-s/ingress/ingress-nginx/helm/ingress-nginx$ kubectl label no k8s-node0 ingress=true
    node/k8s-node0 labeled

Check the labels:

    gateman@MoreFine-S500:~/tmp/ingress-nginx$ kubectl get nodes --show-labels=true
    NAME         STATUS   ROLES                  AGE    VERSION   LABELS
    k8s-master   Ready    control-plane,master   128d   v1.23.6   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,ingress=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-master,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node-role.kubernetes.io/master=,node.kubernetes.io/exclude-from-external-load-balancers=
    k8s-node0    Ready    <none>                 128d   v1.23.6   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,ingress=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node0,kubernetes.io/os=linux
    k8s-node1    Ready    <none>                 128d   v1.23.6   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node1,kubernetes.io/os=linux
    k8s-node3    Ready    <none>                 108d   v1.23.6   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node3,kubernetes.io/os=linux

Note that k8s-master also carries ingress=true in this output; as explained above, its taint still keeps the controller Pod off it.
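To make the combined effect of the node selector and the master taint concrete, here is a small model of the scheduling decision. It is a sketch under stated assumptions: labels are reduced to the two keys used by the selector, taints are modelled as plain strings, and the taint name node-role.kubernetes.io/master:NoSchedule is an assumption, since the article only says that the master is tainted.

```python
# Labels reduced to the keys the selector looks at; taint name is assumed.
NODES = {
    "k8s-master": {
        "labels": {"kubernetes.io/os": "linux", "ingress": "true"},
        "taints": {"node-role.kubernetes.io/master:NoSchedule"},
    },
    "k8s-node0": {
        "labels": {"kubernetes.io/os": "linux", "ingress": "true"},
        "taints": set(),
    },
    "k8s-node1": {"labels": {"kubernetes.io/os": "linux"}, "taints": set()},
    "k8s-node3": {"labels": {"kubernetes.io/os": "linux"}, "taints": set()},
}

NODE_SELECTOR = {"kubernetes.io/os": "linux", "ingress": "true"}


def selector_matches(labels, selector):
    """A nodeSelector matches when every key/value pair is present on the node."""
    return all(labels.get(key) == value for key, value in selector.items())


def eligible_nodes(nodes, selector, tolerations=frozenset()):
    """Nodes that match the selector and have no taint the Pod fails to tolerate."""
    result = []
    for name, node in nodes.items():
        untolerated = node["taints"] - set(tolerations)
        if selector_matches(node["labels"], selector) and not untolerated:
            result.append(name)
    return result


print(eligible_nodes(NODES, NODE_SELECTOR))
```

In this model only k8s-node0 qualifies, which matches where the controller Pod ends up. Real tolerations also match on key, operator and effect, which the sketch leaves out.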

Deploying the ingress-nginx controller

Change into the extracted chart folder, the one that contains values.yaml.

Run:

helm install

    gateman@MoreFine-S500:~/projects/coding/k8s-s/ingress/ingress-nginx/helm/ingress-nginx$ helm install ingress-nginx -n ingress-nginx .
    NAME: ingress-nginx
    LAST DEPLOYED: Tue Jun 25 02:16:57 2024
    NAMESPACE: ingress-nginx
    STATUS: deployed
    REVISION: 1
    TEST SUITE: None
    NOTES:
    The ingress-nginx controller has been installed.

Check the Pods:

    gateman@MoreFine-S500:~/tmp/ingress-nginx$ kubectl get po -o wide -n ingress-nginx
    NAME                             READY   STATUS    RESTARTS        AGE     IP            NODE        NOMINATED NODE   READINESS GATES
    ingress-nginx-controller-72dmb   1/1     Running   3 (2d18h ago)   6d20h   192.168.0.6   k8s-node0   <none>           <none>

The ingress controller is now running on k8s-node0. Its Pod IP, 192.168.0.6, is the node's own IP because of hostNetwork.
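As an alternative to editing values.yaml, Helm accepts overrides on the command line through its --set flag. The sketch below flattens a nested dictionary of the four overrides discussed earlier into such flags; the helper to_set_flags is made up for this illustration. The nodeSelector is deliberately left out, because label keys that contain dots (such as kubernetes.io/os) need escaping with --set and are simpler to keep in values.yaml.

```python
def to_set_flags(values, prefix=""):
    """Flatten a nested dict into a list of Helm "--set key.path=value" arguments."""
    flags = []
    for key, value in values.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flags.extend(to_set_flags(value, path))
        else:
            if isinstance(value, bool):
                value = str(value).lower()  # Helm expects true/false
            flags.extend(["--set", f"{path}={value}"])
    return flags


# The four overrides made in values.yaml in this article.
OVERRIDES = {
    "controller": {
        "kind": "DaemonSet",
        "hostNetwork": True,
        "dnsPolicy": "ClusterFirstWithHostNet",
        "service": {"type": "ClusterIP"},
    }
}

command = ["helm", "install", "ingress-nginx", ".", "-n", "ingress-nginx"]
command += to_set_flags(OVERRIDES)
print(" ".join(command))
```

Editing values.yaml, as done in this article, keeps the configuration in one reviewable file; the flag form is mostly convenient for quick experiments.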

Creating an Ingress resource

Writing the YAML

YAML file:

    apiVersion: networking.k8s.io/v1
    kind: Ingress # a built-in Kubernetes resource kind
    metadata:
      name: ingress-nginx-instance-0 # the ingress name
      namespace: default # the namespace of the ingress
      annotations: # Ingress frequently uses annotations to configure options that depend on the Ingress controller,
        # an example of which is the rewrite-target annotation. Different Ingress controllers support different annotations.
        kubernetes.io/ingress.class: nginx # selects the ingress controller with the same class
    spec: # spec means specification, it is the main part of the Ingress resource
      rules: # rules is a list of host rules used to configure the Ingress controller
      - host: "www.example.com" # host is the domain name that requests must carry; a wildcard is allowed, but only as the first label, e.g. *.example.com
        http: # http holds the rules for HTTP traffic; the Ingress resource cannot choose the port, the controller listens on 80 by default
          paths: # equivalent to the location setting of nginx, could be multiple
          - pathType: Prefix # supports Prefix, Exact and ImplementationSpecific
            backend: # backend is the service to forward the request to
              service: # usually the ClusterIP Service
                name: clusterip-bq-api-service
                port:
                  number: 8080
            path: /actuator # equivalent to the location setting of nginx

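The above is about the simplest possible Ingress resource, and I have commented nearly every line.

A few points are worth noting:

- If there is already metadata, why are annotations needed?

  annotations is itself a field inside metadata. Fields such as name and namespace have a fixed meaning defined by Kubernetes, whereas annotations hold free-form key/value pairs that Kubernetes itself does not interpret. For Ingress they matter a great deal, because controller-specific behaviour is configured through them, and each Ingress controller supports its own set of annotations.

- pathType: here I only use Prefix, which is also the style commonly used in Spring Cloud Gateway. With ingress-nginx, ImplementationSpecific is the more common choice; the reason is explained later.

- The rule configured here is ingress:80 -> clusterip:8080. The Ingress resource has no field for the listening port: the controller serves HTTP on 80 and TLS on 443 by default.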

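Applying the manifest

Create the resource with kubectl apply -f and the manifest file. The only transcript captured for this step shows the deletion of an instance with the same name:

    $ kubectl delete ingress ingress-nginx-instance-0
    ingress.networking.k8s.io "ingress-nginx-instance-0" deleted

Inspecting and testing the Ingress

First, take a look at the Ingress that was just created: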

    gateman@MoreFine-S500:~/projects/coding/k8s-s/ingress/ingress-nginx/ingress-resource$ kubectl get ingress -o wide
    NAME                       CLASS    HOSTS             ADDRESS        PORTS   AGE
    ingress-nginx-instance-0   <none>   www.example.com   10.107.95.68   80      53m
    gateman@MoreFine-S500:~/projects/coding/k8s-s/ingress/ingress-nginx/ingress-resource$ kubectl describe ingress ingress-nginx-instance-0
    Name:             ingress-nginx-instance-0
    Labels:           <none>
    Namespace:        default
    Address:          10.107.95.68
    Ingress Class:    <none>
    Default backend:
    Rules:
      Host             Path  Backends
      ----             ----  --------
      www.example.com
                       /actuator   clusterip-bq-api-service:8080 (10.244.2.146:8080,10.244.2.147:8080,10.244.3.86:8080 + 1 more...)
    Annotations:       kubernetes.io/ingress.class: nginx
    Events:
      Type    Reason  Age                From                      Message
      ----    ------  ----               ----                      -------
      Normal  Sync    52m (x2 over 53m)  nginx-ingress-controller  Scheduled for sync

The Ingress has been given a cluster-internal address. 10.107.95.68 appears to lie in the Service IP range, which suggests it is the ClusterIP of the controller's Service.

Let's check whether it can be called from inside a container:

    gateman@MoreFine-S500:~/projects/coding/k8s-s/ingress/ingress-nginx/ingress-resource$ kubectl exec -it dns-test -- /bin/sh
    / # ping 10.107.95.68
    PING 10.107.95.68 (10.107.95.68): 56 data bytes
    ^C
    --- 10.107.95.68 ping statistics ---
    11 packets transmitted, 0 packets received, 100% packet loss
    / # curl 10.107.95.68/actuator/info
    404 Not Found
    nginx
    / #

The IP cannot be pinged from inside the container, but curl does reach nginx. The call still fails with 404, because the nginx rule only matches requests for the configured host name www.example.com (see the YAML above).

So we add an entry to /etc/hosts inside the container:

    10.107.95.68 www.example.com

Now the call succeeds:

    / # nslookup www.example.com
    Server:    10.96.0.10
    Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

    Name:      www.example.com
    Address 1: 10.107.95.68 www.example.com
    / # curl www.example.com/actuator/info
    {"app":"Sales API","version":"1.1.7","hostname":"deployment-bq-api-service-6f6ffc7866-g4854","description":"This is a simple Spring Boot application to demonstrate the use of BigQuery in GCP."}
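The 404 for the bare IP and the success for www.example.com both follow from name-based routing: the controller picks a rule by the Host header first and by the path second. The sketch below models that decision for the single rule defined above. It is an illustration only, with made-up helper names, and it implements the Prefix path type as the Kubernetes Ingress specification describes it (matching element by element, split on /), not the nginx configuration that the controller actually generates.

```python
# (host, path prefix, backend) taken from the Ingress defined above.
RULES = [("www.example.com", "/actuator", "clusterip-bq-api-service:8080")]


def prefix_matches(rule_path, request_path):
    """Prefix pathType: compare the paths element by element."""
    rule_parts = [part for part in rule_path.split("/") if part]
    request_parts = [part for part in request_path.split("/") if part]
    return request_parts[: len(rule_parts)] == rule_parts


def route(host, path, rules=RULES):
    """Return the backend for a request, or None when no rule matches (404)."""
    for rule_host, rule_path, backend in rules:
        if host == rule_host and prefix_matches(rule_path, path):
            return backend
    return None


print(route("10.107.95.68", "/actuator/info"))     # no rule for this host
print(route("www.example.com", "/actuator/info"))  # forwarded to the Service
```

The same reasoning explains every 404 in this article that is answered by nginx itself: the request reached the controller, but no rule matched its Host header.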

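Calling the Ingress from a server outside the cluster

Above, we edited /etc/hosts inside the container to map www.example.com to 10.107.95.68.

That IP, however, is not reachable from servers outside the containers, in particular from servers outside the cluster.

So how does a server outside the cluster reach the Ingress?

Log in to the node that hosts the Pod created by the ingress-nginx controller:

    gateman@MoreFine-S500:~/projects/coding/k8s-s/ingress/ingress-nginx/ingress-resource$ kubectl get pods --all-namespaces -o wide | grep ingress
    ingress-nginx   ingress-nginx-controller-72dmb   1/1   Running   4 (8d ago)   15d   192.168.0.6   k8s-node0   <none>   <none>

Check which ports are in use on k8s-node0: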

gateman@k8s-node0:~$ sudo ss -tunlp | grep nginx

tcp LISTEN 0 4096 0.0.0.0:443 0.0.0.0:* users:(("nginx",pid=67247,fd=23),("nginx",pid=67246,fd=23),("nginx",pid=67245,fd=23),("nginx",pid=67244,fd=23),("nginx",pid=3823,fd=23))

tcp LISTEN 0 4096 0.0.0.0:443 0.0.0.0:* users:(("nginx",pid=67247,fd=22),("nginx",pid=67246,fd=22),("nginx",pid=67245,fd=22),("nginx",pid=67244,fd=22),("nginx",pid=3823,fd=22))

tcp LISTEN 0 4096 0.0.0.0:443 0.0.0.0:* users:(("nginx",pid=67247,fd=21),("nginx",pid=67246,fd=21),("nginx",pid=67245,fd=21),("nginx",pid=67244,fd=21),("nginx",pid=3823,fd=21))

tcp LISTEN 0 4096 0.0.0.0:443 0.0.0.0:* users:(("nginx",pid=67247,fd=9),("nginx",pid=67246,fd=9),("nginx",pid=67245,fd=9),("nginx",pid=67244,fd=9),("nginx",pid=3823,fd=9))

tcp LISTEN 0 4096 127.0.0.1:10245 0.0.0.0:* users:(("nginx-ingress-c",pid=2207,fd=7))

tcp LISTEN 0 511 127.0.0.1:10246 0.0.0.0:* users:(("nginx",pid=67247,fd=13),("nginx",pid=67246,fd=13),("nginx",pid=67245,fd=13),("nginx",pid=67244,fd=13),("nginx",pid=3823,fd=13))

tcp LISTEN 0 511 127.0.0.1:10247 0.0.0.0:* users:(("nginx",pid=67247,fd=14),("nginx",pid=67246,fd=14),("nginx",pid=67245,fd=14),("nginx",pid=67244,fd=14),("nginx",pid=3823,fd=14))

tcp LISTEN 0 4096 0.0.0.0:80 0.0.0.0:* users:(("nginx",pid=67247,fd=17),("nginx",pid=67246,fd=17),("nginx",pid=67245,fd=17),("nginx",pid=67244,fd=17),("nginx",pid=3823,fd=17))

tcp LISTEN 0 4096 0.0.0.0:80 0.0.0.0:* users:(("nginx",pid=67247,fd=16),("nginx",pid=67246,fd=16),("nginx",pid=67245,fd=16),("nginx",pid=67244,fd=16),("nginx",pid=3823,fd=16))

tcp LISTEN 0 4096 0.0.0.0:80 0.0.0.0:* users:(("nginx",pid=67247,fd=15),("nginx",pid=67246,fd=15),("nginx",pid=67245,fd=15),("nginx",pid=67244,fd=15),("nginx",pid=3823,fd=15))

tcp LISTEN 0 4096 0.0.0.0:80 0.0.0.0:* users:(("nginx",pid=67247,fd=7),("nginx",pid=67246,fd=7),("nginx",pid=67245,fd=7),("nginx",pid=67244,fd=7),("nginx",pid=3823,fd=7))

tcp LISTEN 0 4096 0.0.0.0:8181 0.0.0.0:* users:(("nginx",pid=67247,fd=29),("nginx",pid=67246,fd=29),("nginx",pid=67245,fd=29),("nginx",pid=67244,fd=29),("nginx",pid=3823,fd=29))

tcp LISTEN 0 4096 0.0.0.0:8181 0.0.0.0:* users:(("nginx",pid=67247,fd=28),("nginx",pid=67246,fd=28),("nginx",pid=67245,fd=28),("nginx",pid=67244,fd=28),("nginx",pid=3823,fd=28))

tcp LISTEN 0 4096 0.0.0.0:8181 0.0.0.0:* users:(("nginx",pid=67247,fd=27),("nginx",pid=67246,fd=27),("nginx",pid=67245,fd=27),("nginx",pid=67244,fd=27),("nginx",pid=3823,fd=27))

tcp LISTEN 0 4096 0.0.0.0:8181 0.0.0.0:* users:(("nginx",pid=67247,fd=11),("nginx",pid=67246,fd=11),("nginx",pid=67245,fd=11),("nginx",pid=67244,fd=11),("nginx",pid=3823,fd=11))

tcp LISTEN 0 4096 *:8443 *:* users:(("nginx-ingress-c",pid=2207,fd=12))

tcp LISTEN 0 4096 [::]:443 [::]:* users:(("nginx",pid=67247,fd=10),("nginx",pid=67246,fd=10),("nginx",pid=67245,fd=10),("nginx",pid=67244,fd=10),("nginx",pid=3823,fd=10))

tcp LISTEN 0 4096 [::]:443 [::]:* users:(("nginx",pid=67247,fd=24),("nginx",pid=67246,fd=24),("nginx",pid=67245,fd=24),("nginx",pid=67244,fd=24),("nginx",pid=3823,fd=24))

tcp LISTEN 0 4096 [::]:443 [::]:* users:(("nginx",pid=67247,fd=25),("nginx",pid=67246,fd=25),("nginx",pid=67245,fd=25),("nginx",pid=67244,fd=25),("nginx",pid=3823,fd=25))

tcp LISTEN 0 4096 [::]:443 [::]:* users:(("nginx",pid=67247,fd=26),("nginx",pid=67246,fd=26),("nginx",pid=67245,fd=26),("nginx",pid=67244,fd=26),("nginx",pid=3823,fd=26))

tcp LISTEN 0 4096 *:10254 *:* users:(("nginx-ingress-c",pid=2207,fd=11))

tcp LISTEN 0 4096 [::]:80 [::]:* users:(("nginx",pid=67247,fd=8),("nginx",pid=67246,fd=8),("nginx",pid=67245,fd=8),("nginx",pid=67244,fd=8),("nginx",pid=3823,fd=8))

tcp LISTEN 0 4096 [::]:80 [::]:* users:(("nginx",pid=67247,fd=18),("nginx",pid=67246,fd=18),("nginx",pid=67245,fd=18),("nginx",pid=67244,fd=18),("nginx",pid=3823,fd=18))

tcp LISTEN 0 4096 [::]:80 [::]:* users:(("nginx",pid=67247,fd=19),("nginx",pid=67246,fd=19),("nginx",pid=67245,fd=19),("nginx",pid=67244,fd=19),("nginx",pid=3823,fd=19))

tcp LISTEN 0 4096 [::]:80 [::]:* users:(("nginx",pid=67247,fd=20),("nginx",pid=67246,fd=20),("nginx",pid=67245,fd=20),("nginx",pid=67244,fd=20),("nginx",pid=3823,fd=20))

tcp LISTEN 0 4096 [::]:8181 [::]:* users:(("nginx",pid=67247,fd=12),("nginx",pid=67246,fd=12),("nginx",pid=67245,fd=12),("nginx",pid=67244,fd=12),("nginx",pid=3823,fd=12))

tcp LISTEN 0 4096 [::]:8181 [::]:* users:(("nginx",pid=67247,fd=30),("nginx",pid=67246,fd=30),("nginx",pid=67245,fd=30),("nginx",pid=67244,fd=30),("nginx",pid=3823,fd=30))

tcp LISTEN 0 4096 [::]:8181 [::]:* users:(("nginx",pid=67247,fd=31),("nginx",pid=67246,fd=31),("nginx",pid=67245,fd=31),("nginx",pid=67244,fd=31),("nginx",pid=3823,fd=31))

tcp LISTEN 0 4096 [::]:8181 [::]:* users:(("nginx",pid=67247,fd=32),("nginx",pid=67246,fd=32),("nginx",pid=67245,fd=32),("nginx",pid=67244,fd=32),("nginx",pid=3823,fd=32))

We can see that nginx workers are already listening on port 80.

So the Ingress is in fact reached through port 80 of k8s-node0.

Log in to a host outside the cluster (but still in the same VPC network):

    gcloud compute ssh tf-vpc0-subnet0-main-server

Edit /etc/hosts:

    gateman@tf-vpc0-subnet0-main-server:~$ sudo vi /etc/hosts
    gateman@tf-vpc0-subnet0-main-server:~$ cat /etc/hosts
    127.0.0.1 localhost
    ::1 localhost ip6-localhost ip6-loopback
    ff02::1 ip6-allnodes
    ff02::2 ip6-allrouters
    192.168.1.5 tf-vpc0-subnet1-vm1
    192.168.0.42 tf-vpc0-subnet0-mysql0
    192.168.0.3 k8s-master
    192.168.0.35 tf-vpc0-subnet0-main-server.c.jason-hsbc.internal tf-vpc0-subnet0-main-server # Added by Google
    169.254.169.254 metadata.google.internal # Added by Google
    192.168.0.6 www.example.com
    gateman@tf-vpc0-subnet0-main-server:~$

Here 192.168.0.6 is the IP of k8s-node0.

Test

Calls by IP or by the name k8s-node0 both fail:

    gateman@tf-vpc0-subnet0-main-server:~$ curl 192.168.0.6/actuator/info
    404 Not Found
    nginx
    gateman@tf-vpc0-subnet0-main-server:~$ curl k8s-node0/actuator/info
    404 Not Found
    nginx

But www.example.com works:

gateman@tf-vpc0-subnet0-main-server:~$ curl www.example.com/actuator/info

{"app":"Sales API","version":"1.1.7","hostname":"deployment-bq-api-service-6f6ffc7866-g4854","description":"This is a simple Spring Boot application to demonstrate the use of BigQuery in GCP."}

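Architecture diagram

At this point the Ingress basically does its job: a server outside the Kubernetes internal network can call the APIs deployed in Pods inside the cluster.

Note that a host outside the cluster network does not reach the Ingress resource directly; it connects to the IP of the node on which the Ingress controller runs.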

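Path rewriting

Requirement

In the example above, one Ingress fronts a single Service, and for that case the configuration works fine.

With one Ingress in front of two Services it no longer does.

Suppose service a and service b both expose APIs under the same path, e.g. both have /actuator/info.

What I want is:

/service-a/actuator on the Ingress --> /actuator** of service a, and /service-b/actuator --> /actuator** of service b

Let's first try the naive approach: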

    apiVersion: networking.k8s.io/v1
    kind: Ingress # a built-in Kubernetes resource kind
    metadata:
      name: ingress-nginx-instance-0 # the ingress name
      namespace: default # the namespace of the ingress
      annotations: # Ingress frequently uses annotations to configure options that depend on the Ingress controller,
        # an example of which is the rewrite-target annotation. Different Ingress controllers support different annotations.
        kubernetes.io/ingress.class: nginx # selects the ingress controller with the same class
    spec: # spec means specification, it is the main part of the Ingress resource
      rules: # rules is a list of host rules used to configure the Ingress controller
      - host: "www.example.com" # host is the domain name that requests must carry; a wildcard is allowed, but only as the first label, e.g. *.example.com
        http: # http holds the rules for HTTP traffic; the Ingress resource cannot choose the port, the controller listens on 80 by default
          paths: # equivalent to the location setting of nginx, could be multiple
          - pathType: Prefix # supports Prefix, Exact and ImplementationSpecific
            backend: # backend is the service to forward the request to
              service: # usually the ClusterIP Service
                name: clusterip-bq-api-service
                port:
                  number: 8080
            path: /bq-api-service/actuator # changed here

The test fails: the Ingress forwards the path unchanged, and bq-api-service has no API that starts with /bq-api-service. The first 404 below comes from nginx (no rule matches /actuator any more), the second from the application itself.

    gateman@tf-vpc0-subnet0-main-server:~$ curl www.example.com/actuator/info
    404 Not Found
    nginx
    gateman@tf-vpc0-subnet0-main-server:~$ curl www.example.com/bq-api-service/actuator/info
    {"timestamp":"2024-07-11T16:57:59.849+00:00","status":404,"error":"Not Found","message":"No message available","path":"/bq-api-service/actuator/info"}

nginx.ingress.kubernetes.io/rewrite-target

According to the official documentation, this can be achieved with a single annotation.

The manifest becomes:

    apiVersion: networking.k8s.io/v1
    kind: Ingress # a built-in Kubernetes resource kind
    metadata:
      name: ingress-nginx-instance-0 # the ingress name
      namespace: default # the namespace of the ingress
      annotations: # Ingress frequently uses annotations to configure options that depend on the Ingress controller,
        # an example of which is the rewrite-target annotation. Different Ingress controllers support different annotations.
        kubernetes.io/ingress.class: nginx # selects the ingress controller with the same class
        nginx.ingress.kubernetes.io/rewrite-target: /$2 # rewrite the URL so that ingress/bq-api-service/xxx points to backend:8080/xxx
    spec: # spec means specification, it is the main part of the Ingress resource
      rules: # rules is a list of host rules used to configure the Ingress controller
      - host: "www.example.com" # host is the domain name that requests must carry; a wildcard is allowed, but only as the first label, e.g. *.example.com
        http: # http holds the rules for HTTP traffic; the Ingress resource cannot choose the port, the controller listens on 80 by default
          paths: # equivalent to the location setting of nginx, could be multiple
          - pathType: ImplementationSpecific # supports Prefix, Exact and ImplementationSpecific
            # to use rewrite-target we must use ImplementationSpecific, because only it supports a regular-expression path
            backend: # backend is the service to forward the request to
              service: # usually the ClusterIP Service
                name: clusterip-bq-api-service
                port:
                  number: 8080
            path: /bq-api-service(/|$)(.*) # equivalent to the location setting of nginx
            # means: if the URL is /bq-api-service/xxx, it is forwarded to backend:8080/xxx
            # (/|$) means / or end of the path
            # /bq-api-service(/|$)(.*) with /$2: here $2 is the second capture group, the (.*) part
            # we could also use /bq-api-service(.*) with $1; note that $1 then has no / before it

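Note that I changed three things:

- pathType goes from Prefix to ImplementationSpecific, because only ImplementationSpecific supports a regular-expression path.

- The annotation nginx.ingress.kubernetes.io/rewrite-target: /$2 is added; $2 refers to the second capture group of the regular-expression path.

- path: /bq-api-service(/|$)(.*) is now a regular expression.

The regular expression in more detail:

(/|$) means that bq-api-service may be followed either by / or by the end of the path. The $ here is not a literal $ character but the end-of-string anchor. This is the first capture group.

(.*) matches any sequence of characters and is the second capture group.

For /bq-api-service the pattern matches because the path ends right after the prefix; (.*) is then empty, so /$2 is /.

For /bq-api-service/aaa/bb/ccc, $2 is aaa/bb/ccc and /$2 is /aaa/bb/ccc.

In my tests, /bq-api-service(.*) with $1 (note: not /$1) gave the same result. Since the official documentation recommends the form above, we follow it.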
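The capture-group mechanics can be checked outside the cluster. The sketch below reproduces the substitution with Python's re module as a stand-in for the regular-expression engine used by nginx; the function rewrite and the two constant names are made up for this illustration, and it models only the path and rewrite-target pair, not the rest of the controller.

```python
import re

OFFICIAL = r"/bq-api-service(/|$)(.*)"  # path used in the manifest above
SIMPLE = r"/bq-api-service(.*)"         # the shorter alternative


def rewrite(path, pattern, target):
    """Return the rewritten path, or None when the pattern does not match.

    $1, $2, ... in target are replaced by the matching capture groups.
    """
    match = re.match(pattern, path)
    if match is None:
        return None
    return re.sub(
        r"\$(\d+)",
        lambda ref: match.group(int(ref.group(1))) or "",
        target,
    )


print(rewrite("/bq-api-service/actuator/info", OFFICIAL, "/$2"))  # /actuator/info
print(rewrite("/bq-api-service", OFFICIAL, "/$2"))                # /
print(rewrite("/bq-api-service/actuator/info", SIMPLE, "$1"))     # /actuator/info
print(rewrite("/bq-api-servicefoo", SIMPLE, "$1"))                # foo
```

The last call hints at why the documented form is the safer one: the short pattern also accepts paths such as /bq-api-servicefoo, whereas (/|$) requires the prefix to end at a path boundary.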

Test

The test passes:

gateman@tf-vpc0-subnet0-main-server:~$ curl www.example.com/bq-api-service/actuator/info

{"app":"Sales API","version":"1.1.7","hostname":"deployment-bq-api-service-6f6ffc7866-lwxt7","description":"This is a simple Spring Boot application to demonstrate the use of BigQuery in GCP."}

gateman@tf-vpc0-subnet0-main-server:~$ curl www.example.com/bq-api-service/actuator/info

{"app":"Sales API","version":"1.1.7","hostname":"deployment-bq-api-service-6f6ffc7866-mxwcq","description":"This is a simple Spring Boot application to demonstrate the use of BigQuery in GCP."}

Accessing the Ingress from the public internet

Approach

k8s-node0, the node behind the Ingress, has no public IP, so it cannot be reached directly from the internet.

The quick and dirty fix would be to give k8s-node0 a public IP, but that is not a strategic solution.

Instead, take a host that has a public IP and sits in the same VPC network (dual NIC), tf-vpc0-subnet0-main-server, and configure an nginx proxy on it that points to the Ingress node k8s-node0.

    gateman@MoreFine-S500:~/projects/coding/k8s-s/ingress/ingress-nginx/ingress-resource$ gcloud compute instances list
    NAME                         ZONE            MACHINE_TYPE    PREEMPTIBLE  INTERNAL_IP   EXTERNAL_IP  STATUS
    k8s-master                   europe-west2-c  n2d-highmem-2   true         192.168.0.3                RUNNING
    k8s-node0                    europe-west2-c  n2d-highmem-4   true         192.168.0.6                RUNNING
    k8s-node1                    europe-west2-c  n2d-highmem-4   true         192.168.0.44               RUNNING
    k8s-node2                    europe-west2-c  n2d-highmem-4   true         192.168.0.43               TERMINATED
    k8s-node3                    europe-west2-c  n2d-highmem-4   true         192.168.0.45               RUNNING
    tf-vpc0-subnet0-main-server  europe-west2-c  n2d-standard-4  true         192.168.0.35  34.39.2.90   RUNNING

Architecture diagram

Configuring the domain name

First, assign a domain name to the host with the public IP:

www.jp-gcp-vms.cloud

The domain is a paid one, of course: a bit over ten yuan for the first year.

This requires your cloud host to have a static IP.

Configuring the reverse proxy on the public host

nginx must already be installed on that host.

8085_k8s_ingress.conf

    upstream k8s-ingress-server {
        server k8s-node0:80;
    }

    server {
        listen 8085;
        server_name www.jp-gvms.cloud;

        location / {
            proxy_pass http://k8s-ingress-server;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        }
    }

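The configuration is simple.

When port 8085 is accessed through the public domain www.jp-gcp-vms.cloud, the HTTP request is forwarded to k8s-node0:80.

k8s-node0 is the default internal DNS name that GCP assigns when the VM is created.

For an introduction to configuring a reverse proxy, see my other article:

Nginx reverse proxy configuration - part 3

Reconfiguring the Ingress YAML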

    apiVersion: networking.k8s.io/v1
    kind: Ingress # a built-in Kubernetes resource kind
    metadata:
      name: ingress-nginx-instance-0 # the ingress name
      namespace: default # the namespace of the ingress
      annotations: # Ingress frequently uses annotations to configure options that depend on the Ingress controller,
        # an example of which is the rewrite-target annotation. Different Ingress controllers support different annotations.
        kubernetes.io/ingress.class: nginx # selects the ingress controller with the same class
        nginx.ingress.kubernetes.io/rewrite-target: /$2 # rewrite the URL so that ingress/bq-api-service/xxx points to backend:8080/xxx
    spec: # spec means specification, it is the main part of the Ingress resource
      rules: # rules is a list of host rules used to configure the Ingress controller
      - host: "www.example.com" # host is the domain name that requests must carry; a wildcard is allowed, but only as the first label, e.g. *.example.com
        http: # http holds the rules for HTTP traffic; the Ingress resource cannot choose the port, the controller listens on 80 by default
          paths: # equivalent to the location setting of nginx, could be multiple
          - pathType: ImplementationSpecific # supports Prefix, Exact and ImplementationSpecific
            # to use rewrite-target we must use ImplementationSpecific, because only it supports a regular-expression path
            backend: # backend is the service to forward the request to
              service: # usually the ClusterIP Service
                name: clusterip-bq-api-service
                port:
                  number: 8080
            path: /bq-api-service(/|$)(.*) # equivalent to the location setting of nginx
            # means: if the URL is /bq-api-service/xxx, it is forwarded to backend:8080/xxx
            # (/|$) means / or end of the path
            # /bq-api-service(/|$)(.*) with /$2: here $2 is the second capture group, the (.*) part
            # we could also use /bq-api-service(.*) with $1; note that $1 then has no / before it
      - host: "k8s-node0"
        http:
          paths: # equivalent to the location setting of nginx, could be multiple
          - pathType: ImplementationSpecific # supports Prefix, Exact and ImplementationSpecific
            backend:
              service:
                name: clusterip-bq-api-service
                port:
                  number: 8080
            path: /bq-api-service(/|$)(.*) # equivalent to the location setting of nginx

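Since the public nginx forwards www.jp-gcp-vms.cloud:8085 to k8s-node0:80, adding one more rule to the Ingress should be enough, right?

host: "www.example.com" is for access from the internal network.

host: "k8s-node0" is for access from the public internet.

It looks perfect.

In reality, the new rule does not match.

A big pitfall

Troubleshooting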

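The cause is this line in the nginx configuration above:

    proxy_set_header Host $host;

It passes the domain name requested by the public browser on to the Ingress, and the Ingress has no rule for that domain.

Solution 1:

In the Ingress, change - host: "k8s-node0" to - host: "www.jp-gcp-vms.cloud".

Tested: it works.

However, the domain name is then configured in both the nginx reverse proxy and the Ingress, which is far from ideal.

Solution 2:

Remove proxy_set_header Host $host;

    upstream k8s-ingress-server {
        server k8s-node0:80;
    }

    server {
        listen 8085;
        server_name www.jp-gvms.cloud;

        location / {
            proxy_pass http://k8s-ingress-server;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        }
    }

Tested: it fails. Without the directive, nginx falls back to its default, proxy_set_header Host $proxy_host, so the Host header becomes the name used in proxy_pass (here the upstream name k8s-ingress-server), and the Ingress has no rule for that name either. Another pitfall.

Solution 3:

Force the Host header that is passed on to be k8s-node0:

    upstream k8s-ingress-server {
        server k8s-node0:80;
    }

    server {
        listen 8085;
        server_name www.jp-gvms.cloud;

        location / {
            proxy_pass http://k8s-ingress-server;
            proxy_set_header Host k8s-node0;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        }
    }

Tested: this works too.

This is the most suitable approach I have found.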
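The three attempts differ only in the Host header that the public nginx sends to the Ingress node. The sketch below models that one decision. It is a simplified illustration: the function names are made up, $host is reduced to the host name the client requested, and the default case follows the nginx documentation, where proxy_set_header Host $proxy_host applies when no Host directive is configured.

```python
# Hosts for which the Ingress above defines rules.
INGRESS_HOSTS = {"www.example.com", "k8s-node0"}


def forwarded_host(client_host, upstream_name, host_directive=None):
    """Host header sent upstream for a given proxy_set_header Host setting."""
    if host_directive is None:
        # No directive: nginx sends $proxy_host, the name used in proxy_pass.
        return upstream_name
    if host_directive == "$host":
        # Pass through the host name requested by the client.
        return client_host
    # Any other value is sent literally.
    return host_directive


def ingress_matches(host):
    """True when the Ingress has a rule for this Host header."""
    return host in INGRESS_HOSTS


client = "www.jp-gcp-vms.cloud"
upstream = "k8s-ingress-server"
for directive in ("$host", None, "k8s-node0"):
    host = forwarded_host(client, upstream, directive)
    print(directive, "->", host, "matches" if ingress_matches(host) else "404")
```

Only the literal k8s-node0 produces a Host header that the Ingress knows, which matches the outcome of the three tests.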

Finally, some questions

- Can one Ingress controller serve multiple Ingress resources?

  Answer: yes.

- If one Ingress controller serves several Ingress instances, do their settings conflict when they contain the same path?

  Answer: yes, so the paths must not repeat across the Ingress instances.

  The path rules of an Ingress decide which backend Service receives a request. When two Ingress resources declare the same path for the same host, both rules compete for the same requests. ingress-nginx resolves this by letting the oldest rule win, so the newer one has no effect.

  To avoid this, keep every combination of host and path unique. If the same path is needed more than once, distinguish the rules by host name, or give each backend its own path prefix, as in the rewrite example above.
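Before applying several Ingress resources to the same controller, duplicates can be spotted by listing every host and path pair and checking which ones appear more than once. The sketch below does that on plain dictionaries; the two Ingress names ingress-a and ingress-b and the helper find_conflicts are hypothetical and used only for this illustration.

```python
from collections import defaultdict

# Hypothetical Ingress resources, reduced to (host, path) pairs.
INGRESSES = [
    {
        "name": "ingress-a",
        "rules": [
            ("www.example.com", "/actuator"),
            ("www.example.com", "/service-a"),
        ],
    },
    {
        "name": "ingress-b",
        "rules": [
            ("www.example.com", "/actuator"),
            ("k8s-node0", "/actuator"),
        ],
    },
]


def find_conflicts(ingresses):
    """Map each (host, path) declared by more than one Ingress to their names."""
    owners = defaultdict(list)
    for ingress in ingresses:
        for host, path in ingress["rules"]:
            owners[(host, path)].append(ingress["name"])
    return {key: names for key, names in owners.items() if len(names) > 1}


for (host, path), names in find_conflicts(INGRESSES).items():
    print(f"{host}{path} is declared by: {', '.join(names)}")
```

Here only www.example.com/actuator is reported: the same path under a different host (k8s-node0) is not a conflict.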