Langchain-Chatchat-Ubuntu服务器本地安装部署笔记

Langchain-Chatchat(原Langchain-ChatGLM)基于 Langchain 与 ChatGLM 等语言模型的本地知识库问答 | Langchain-Chatchat (formerly langchain-ChatGLM), local knowledge based LLM (like ChatGLM) QA app with langchain。

开源网址:https://github.com/chatchat-space/Langchain-Chatchat

因为这是自己毕设项目所需,利用虚拟机实验一下是否能成功部署。项目参考:Langchain-Chatchat-win10本地安装部署成功笔记(CPU)_file "d:\ai\virtual-digital-human\langchain-chatch-CSDN博客

其中有些是自己遇到的坑也会在这里说一下。

一、实验环境

可以查看目前使用的系统版本信息。

cat /proc/version

Linux version 5.15.133.1-microsoft-standard-WSL2 (root@1c602f52c2e4) (gcc (GCC) 11.2.0, GNU ld (GNU Binutils) 2.37) #1 SMP Thu Oct 5 21:02:42 UTC 2023

如果安装有显卡驱动,可以使用下面的代码来查看显卡信息。

nvidia-smi #查看显卡信息

Sat Mar 9 19:31:33 2024

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 550.40.06 Driver Version: 551.23 CUDA Version: 12.4 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA GeForce RTX 3090 On | 00000000:AF:00.0 Off | N/A |

| 32% 25C P8 6W / 350W | 134MiB / 24576MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

| 1 NVIDIA GeForce RTX 3090 On | 00000000:D8:00.0 Off | N/A |

| 32% 24C P8 11W / 350W | 144MiB / 24576MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| 0 N/A N/A 227 G /Xwayland N/A |

| 1 N/A N/A 227 G /Xwayland N/A |

+-----------------------------------------------------------------------------------------+

二、安装步骤

1、安装 Anaconda软件,用于管理python虚拟环境

自己使用的是清华镜像:Index of /anaconda/archive/ | 清华大学开源软件镜像站 | Tsinghua Open Source Mirror

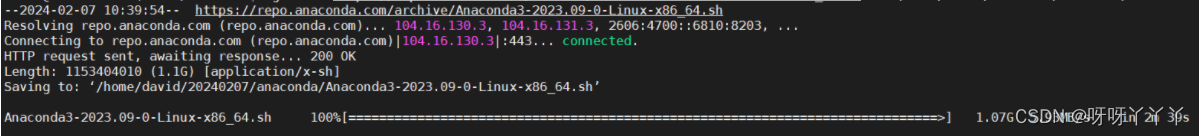

wget下载命令如下:

wget -c 'https://repo.anaconda.com/archive/Anaconda3-2023.09-0-Linux-x86_64.sh' -P

2、创建python运行虚拟环境

创建 conda 环境

conda create -n chatchat python=3.11.7

可以通过 conda info --envs 检查环境是否创建完成。

# conda environments: # base /home/david/anaconda3 NAD /home/david/anaconda3/envs/NAD chat /home/david/anaconda3/envs/chat chat_demo /home/david/anaconda3/envs/chat_demo

进入已经创建好的虚拟环境:conda activate 环境名称 或 source activate 环境名称

$ conda activate chat_demo (chat_demo) $ python --version Python 3.11.7

3、安装pytorch

~$ pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118 -i https://pypi.tuna.tsinghua.edu.cn/simple

Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple

Collecting torch

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/2c/df/5810707da6f2fd4be57f0cc417987c0fa16a2eecf0b1b71f82ea555dc619/torch-2.2.1-cp311-cp311-manylinux1_x86_64.whl (755.6 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 755.6/755.6 MB 2.4 MB/s eta 0:00:00

Collecting torchvision

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/3a/49/12fc5188602c68a789a0fdaee63d176a71ad5c1e34d25aeb8554abe46089/torchvision-0.17.1-cp311-cp311-manylinux1_x86_64.whl (6.9 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 6.9/6.9 MB 6.6 MB/s eta 0:00:00

Collecting torchaudio

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/a6/57/ccebdda4db80e384166c70d8645fa998637051b3b19aca1fd8de80602afb/torchaudio-2.2.1-cp311-cp311-manylinux1_x86_64.whl (3.3 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 3.3/3.3 MB 6.7 MB/s eta 0:00:00

Collecting filelock (from torch)

Using cached https://pypi.tuna.tsinghua.edu.cn/packages/81/54/84d42a0bee35edba99dee7b59a8d4970eccdd44b99fe728ed912106fc781/filelock-3.13.1-py3-none-any.whl (11 kB)

Collecting typing-extensions>=4.8.0 (from torch)

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/f9/de/dc04a3ea60b22624b51c703a84bbe0184abcd1d0b9bc8074b5d6b7ab90bb/typing_extensions-4.10.0-py3-none-any.whl (33 kB)

Collecting sympy (from torch)

Using cached https://pypi.tuna.tsinghua.edu.cn/packages/d2/05/e6600db80270777c4a64238a98d442f0fd07cc8915be2a1c16da7f2b9e74/sympy-1.12-py3-none-any.whl (5.7 MB)

Collecting networkx (from torch)

Using cached https://pypi.tuna.tsinghua.edu.cn/packages/d5/f0/8fbc882ca80cf077f1b246c0e3c3465f7f415439bdea6b899f6b19f61f70/networkx-3.2.1-py3-none-any.whl (1.6 MB)

Collecting jinja2 (from torch)

Using cached https://pypi.tuna.tsinghua.edu.cn/packages/30/6d/6de6be2d02603ab56e72997708809e8a5b0fbfee080735109b40a3564843/Jinja2-3.1.3-py3-none-any.whl (133 kB)

Collecting fsspec (from torch)

Using cached https://pypi.tuna.tsinghua.edu.cn/packages/ad/30/2281c062222dc39328843bd1ddd30ff3005ef8e30b2fd09c4d2792766061/fsspec-2024.2.0-py3-none-any.whl (170 kB)

Collecting nvidia-cuda-nvrtc-cu12==12.1.105 (from torch)

Using cached https://pypi.tuna.tsinghua.edu.cn/packages/b6/9f/c64c03f49d6fbc56196664d05dba14e3a561038a81a638eeb47f4d4cfd48/nvidia_cuda_nvrtc_cu12-12.1.105-py3-none-manylinux1_x86_64.whl (23.7 MB)

Collecting nvidia-cuda-runtime-cu12==12.1.105 (from torch)

Using cached https://pypi.tuna.tsinghua.edu.cn/packages/eb/d5/c68b1d2cdfcc59e72e8a5949a37ddb22ae6cade80cd4a57a84d4c8b55472/nvidia_cuda_runtime_cu12-12.1.105-py3-none-manylinux1_x86_64.whl (823 kB)

Collecting nvidia-cuda-cupti-cu12==12.1.105 (from torch)

Using cached https://pypi.tuna.tsinghua.edu.cn/packages/7e/00/6b218edd739ecfc60524e585ba8e6b00554dd908de2c9c66c1af3e44e18d/nvidia_cuda_cupti_cu12-12.1.105-py3-none-manylinux1_x86_64.whl (14.1 MB)

Collecting nvidia-cudnn-cu12==8.9.2.26 (from torch)

Using cached https://pypi.tuna.tsinghua.edu.cn/packages/ff/74/a2e2be7fb83aaedec84f391f082cf765dfb635e7caa9b49065f73e4835d8/nvidia_cudnn_cu12-8.9.2.26-py3-none-manylinux1_x86_64.whl (731.7 MB)

Collecting nvidia-cublas-cu12==12.1.3.1 (from torch)

Using cached https://pypi.tuna.tsinghua.edu.cn/packages/37/6d/121efd7382d5b0284239f4ab1fc1590d86d34ed4a4a2fdb13b30ca8e5740/nvidia_cublas_cu12-12.1.3.1-py3-none-manylinux1_x86_64.whl (410.6 MB)

Collecting nvidia-cufft-cu12==11.0.2.54 (from torch)

Using cached https://pypi.tuna.tsinghua.edu.cn/packages/86/94/eb540db023ce1d162e7bea9f8f5aa781d57c65aed513c33ee9a5123ead4d/nvidia_cufft_cu12-11.0.2.54-py3-none-manylinux1_x86_64.whl (121.6 MB)

Collecting nvidia-curand-cu12==10.3.2.106 (from torch)

Using cached https://pypi.tuna.tsinghua.edu.cn/packages/44/31/4890b1c9abc496303412947fc7dcea3d14861720642b49e8ceed89636705/nvidia_curand_cu12-10.3.2.106-py3-none-manylinux1_x86_64.whl (56.5 MB)

Collecting nvidia-cusolver-cu12==11.4.5.107 (from torch)

Using cached https://pypi.tuna.tsinghua.edu.cn/packages/bc/1d/8de1e5c67099015c834315e333911273a8c6aaba78923dd1d1e25fc5f217/nvidia_cusolver_cu12-11.4.5.107-py3-none-manylinux1_x86_64.whl (124.2 MB)

Collecting nvidia-cusparse-cu12==12.1.0.106 (from torch)

Using cached https://pypi.tuna.tsinghua.edu.cn/packages/65/5b/cfaeebf25cd9fdec14338ccb16f6b2c4c7fa9163aefcf057d86b9cc248bb/nvidia_cusparse_cu12-12.1.0.106-py3-none-manylinux1_x86_64.whl (196.0 MB)

Collecting nvidia-nccl-cu12==2.19.3 (from torch)

Using cached https://pypi.tuna.tsinghua.edu.cn/packages/38/00/d0d4e48aef772ad5aebcf70b73028f88db6e5640b36c38e90445b7a57c45/nvidia_nccl_cu12-2.19.3-py3-none-manylinux1_x86_64.whl (166.0 MB)

Collecting nvidia-nvtx-cu12==12.1.105 (from torch)

Using cached https://pypi.tuna.tsinghua.edu.cn/packages/da/d3/8057f0587683ed2fcd4dbfbdfdfa807b9160b809976099d36b8f60d08f03/nvidia_nvtx_cu12-12.1.105-py3-none-manylinux1_x86_64.whl (99 kB)

Collecting triton==2.2.0 (from torch)

Using cached https://pypi.tuna.tsinghua.edu.cn/packages/bd/ac/3974caaa459bf2c3a244a84be8d17561f631f7d42af370fc311defeca2fb/triton-2.2.0-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (167.9 MB)

Collecting nvidia-nvjitlink-cu12 (from nvidia-cusolver-cu12==11.4.5.107->torch)

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/58/d1/d1c80553f9d5d07b6072bc132607d75a0ef3600e28e1890e11c0f55d7346/nvidia_nvjitlink_cu12-12.4.99-py3-none-manylinux2014_x86_64.whl (21.1 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 21.1/21.1 MB 6.6 MB/s eta 0:00:00

Collecting numpy (from torchvision)

Using cached https://pypi.tuna.tsinghua.edu.cn/packages/3a/d0/edc009c27b406c4f9cbc79274d6e46d634d139075492ad055e3d68445925/numpy-1.26.4-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (18.3 MB)

Collecting pillow!=8.3.*,>=5.3.0 (from torchvision)

Using cached https://pypi.tuna.tsinghua.edu.cn/packages/66/9c/2e1877630eb298bbfd23f90deeec0a3f682a4163d5ca9f178937de57346c/pillow-10.2.0-cp311-cp311-manylinux_2_28_x86_64.whl (4.5 MB)

Collecting MarkupSafe>=2.0 (from jinja2->torch)

Using cached https://pypi.tuna.tsinghua.edu.cn/packages/97/18/c30da5e7a0e7f4603abfc6780574131221d9148f323752c2755d48abad30/MarkupSafe-2.1.5-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (28 kB)

Collecting mpmath>=0.19 (from sympy->torch)

Using cached https://pypi.tuna.tsinghua.edu.cn/packages/43/e3/7d92a15f894aa0c9c4b49b8ee9ac9850d6e63b03c9c32c0367a13ae62209/mpmath-1.3.0-py3-none-any.whl (536 kB)

Installing collected packages: mpmath, typing-extensions, sympy, pillow, nvidia-nvtx-cu12, nvidia-nvjitlink-cu12, nvidia-nccl-cu12, nvidia-curand-cu12, nvidia-cufft-cu12, nvidia-cuda-runtime-cu12, nvidia-cuda-nvrtc-cu12, nvidia-cuda-cupti-cu12, nvidia-cublas-cu12, numpy, networkx, MarkupSafe, fsspec, filelock, triton, nvidia-cusparse-cu12, nvidia-cudnn-cu12, jinja2, nvidia-cusolver-cu12, torch, torchvision, torchaudio

Successfully installed MarkupSafe-2.1.5 filelock-3.13.1 fsspec-2024.2.0 jinja2-3.1.3 mpmath-1.3.0 networkx-3.2.1 numpy-1.26.4 nvidia-cublas-cu12-12.1.3.1 nvidia-cuda-cupti-cu12-12.1.105 nvidia-cuda-nvrtc-cu12-12.1.105 nvidia-cuda-runtime-cu12-12.1.105 nvidia-cudnn-cu12-8.9.2.26 nvidia-cufft-cu12-11.0.2.54 nvidia-curand-cu12-10.3.2.106 nvidia-cusolver-cu12-11.4.5.107 nvidia-cusparse-cu12-12.1.0.106 nvidia-nccl-cu12-2.19.3 nvidia-nvjitlink-cu12-12.4.99 nvidia-nvtx-cu12-12.1.105 pillow-10.2.0 sympy-1.12 torch-2.2.1 torchaudio-2.2.1 torchvision-0.17.1 triton-2.2.0 typing-extensions-4.10.0

验证是否安装成功:

~$ python

Python 3.11.7 (main, Dec 15 2023, 18:12:31) [GCC 11.2.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import torch

>>> x = torch.rand(5,3)

>>> print(x)

tensor([[0.8278, 0.8746, 0.1025],

[0.7528, 0.6855, 0.7386],

[0.6271, 0.1371, 0.1849],

[0.4098, 0.3203, 0.7615],

[0.5088, 0.7645, 0.8044]])

4、拉取Langchain-Chatchat源代码

有两种方式获取源代码,一种是获取最新代码,一种是获取指定版本的源代码。

# 拉取仓库

git clone https://github.com/chatchat-space/Langchain-Chatchat.git

# 指定版本获取代码

git clone -b v0.2.9 https://github.com/chatchat-space/Langchain-Chatchat.git

在拉取源代码之前先安装 git

5、安装依赖包

cd Langchain-Chatchat pip3 install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple/

Found existing installation: triton 2.2.0

Uninstalling triton-2.2.0:

Successfully uninstalled triton-2.2.0

Attempting uninstall: pillow

Found existing installation: pillow 10.2.0

Uninstalling pillow-10.2.0:

Successfully uninstalled pillow-10.2.0

Attempting uninstall: nvidia-nccl-cu12

Found existing installation: nvidia-nccl-cu12 2.19.3

Uninstalling nvidia-nccl-cu12-2.19.3:

Successfully uninstalled nvidia-nccl-cu12-2.19.3

Attempting uninstall: numpy

Found existing installation: numpy 1.26.4

Uninstalling numpy-1.26.4:

Successfully uninstalled numpy-1.26.4

Attempting uninstall: torch

Found existing installation: torch 2.2.1

Uninstalling torch-2.2.1:

Successfully uninstalled torch-2.2.1

Attempting uninstall: torchvision

Found existing installation: torchvision 0.17.1

Uninstalling torchvision-0.17.1:

Successfully uninstalled torchvision-0.17.1

Attempting uninstall: torchaudio

Found existing installation: torchaudio 2.2.1

Uninstalling torchaudio-2.2.1:

Successfully uninstalled torchaudio-2.2.1

Successfully installed PyMuPDF-1.23.16 PyMuPDFb-1.23.9 SQLAlchemy-2.0.25 Shapely-2.0.3 XlsxWriter-3.2.0 accelerate-0.24.1 aiofiles-23.2.1 aiohttp-3.9.3 aioprometheus-23.12.0 aiosignal-1.3.1 altair-5.2.0 antlr4-python3-runtime-4.9.3 anyio-4.3.0 arxiv-2.1.0 attrs-23.2.0 backoff-2.2.1 beautifulsoup4-4.12.3 blinker-1.7.0 blis-0.7.11 brotli-1.1.0 cachetools-5.3.3 catalogue-2.0.10 certifi-2024.2.2 cffi-1.16.0 chardet-5.2.0 charset-normalizer-3.3.2 click-8.1.7 cloudpathlib-0.16.0 coloredlogs-15.0.1 confection-0.1.4 contourpy-1.2.0 cryptography-42.0.5 cycler-0.12.1 cymem-2.0.8 dataclasses-json-0.6.4 deepdiff-6.7.1 deprecated-1.2.14 deprecation-2.1.0 distro-1.9.0 duckduckgo-search-3.9.9 effdet-0.4.1 einops-0.7.0 emoji-2.10.1 et-xmlfile-1.1.0 faiss-cpu-1.7.4 fastapi-0.109.0 feedparser-6.0.10 filetype-1.2.0 flatbuffers-24.3.7 fonttools-4.49.0 frozenlist-1.4.1 fschat-0.2.35 gitdb-4.0.11 gitpython-3.1.42 greenlet-3.0.3 h11-0.14.0 h2-4.1.0 hpack-4.0.0 httpcore-1.0.4 httptools-0.6.1 httpx-0.26.0 httpx_sse-0.4.0 huggingface-hub-0.21.4 humanfriendly-10.0 hyperframe-6.0.1 idna-3.6 importlib-metadata-7.0.2 iniconfig-2.0.0 iopath-0.1.10 joblib-1.3.2 jsonpatch-1.33 jsonpath-python-1.0.6 jsonpointer-2.4 jsonschema-4.21.1 jsonschema-specifications-2023.12.1 kiwisolver-1.4.5 langchain-0.0.354 langchain-community-0.0.20 langchain-core-0.1.23 langchain-experimental-0.0.47 langcodes-3.3.0 langdetect-1.0.9 langsmith-0.0.87 layoutparser-0.3.4 llama-index-0.9.35 lxml-5.1.0 markdown-3.5.2 markdown-it-py-3.0.0 markdown2-2.4.13 markdownify-0.11.6 marshmallow-3.21.1 matplotlib-3.8.3 mdurl-0.1.2 metaphor-python-0.1.23 msg-parser-1.2.0 msgpack-1.0.8 multidict-6.0.5 murmurhash-1.0.10 mypy-extensions-1.0.0 nest-asyncio-1.6.0 nh3-0.2.15 ninja-1.11.1.1 nltk-3.8.1 numexpr-2.8.6 numpy-1.24.4 nvidia-nccl-cu12-2.18.1 olefile-0.47 omegaconf-2.3.0 onnx-1.15.0 onnxruntime-1.15.1 openai-1.9.0 opencv-python-4.9.0.80 openpyxl-3.1.2 ordered-set-4.1.0 orjson-3.9.15 packaging-23.2 pandas-2.0.3 pathlib-1.0.1 pdf2image-1.17.0 pdfminer.six-20231228 pdfplumber-0.11.0 pikepdf-8.4.1 pillow-9.5.0 pillow-heif-0.15.0 pluggy-1.4.0 portalocker-2.8.2 preshed-3.0.9 prompt-toolkit-3.0.43 protobuf-4.25.3 psutil-5.9.8 pyarrow-15.0.1 pyclipper-1.3.0.post5 pycocotools-2.0.7 pycparser-2.21 pydantic-1.10.13 pydeck-0.8.1b0 pygments-2.17.2 pyjwt-2.8.0 pypandoc-1.13 pyparsing-3.1.2 pypdf-4.1.0 pypdfium2-4.27.0 pytesseract-0.3.10 pytest-7.4.3 python-dateutil-2.9.0.post0 python-decouple-3.8 python-docx-1.1.0 python-dotenv-1.0.1 python-iso639-2024.2.7 python-magic-0.4.27 python-multipart-0.0.9 python-pptx-0.6.23 pytz-2024.1 pyyaml-6.0.1 quantile-python-1.1 rapidfuzz-3.6.2 rapidocr_onnxruntime-1.3.8 ray-2.9.3 referencing-0.33.0 regex-2023.12.25 requests-2.31.0 rich-13.7.1 rpds-py-0.18.0 safetensors-0.4.2 scikit-learn-1.4.1.post1 scipy-1.12.0 sentence_transformers-2.2.2 sentencepiece-0.2.0 sgmllib3k-1.0.0 shortuuid-1.0.12 simplejson-3.19.2 six-1.16.0 smart-open-6.4.0 smmap-5.0.1 sniffio-1.3.1 socksio-1.0.0 soupsieve-2.5 spacy-3.7.2 spacy-legacy-3.0.12 spacy-loggers-1.0.5 srsly-2.4.8 sse_starlette-1.8.2 starlette-0.35.0 streamlit-1.30.0 streamlit-aggrid-0.3.4.post3 streamlit-antd-components-0.3.1 streamlit-chatbox-1.1.11 streamlit-feedback-0.1.3 streamlit-modal-0.1.0 streamlit-option-menu-0.3.12 strsimpy-0.2.1 svgwrite-1.4.3 tabulate-0.9.0 tenacity-8.2.3 thinc-8.2.3 threadpoolctl-3.3.0 tiktoken-0.5.2 timm-0.9.16 tokenizers-0.15.2 toml-0.10.2 toolz-0.12.1 torch-2.1.2 torchaudio-2.1.2 torchvision-0.16.2 tornado-6.4 tqdm-4.66.1 transformers-4.37.2 transformers_stream_generator-0.0.4 triton-2.1.0 typer-0.9.0 typing-inspect-0.9.0 tzdata-2024.1 tzlocal-5.2 unstructured-0.12.5 unstructured-client-0.21.1 unstructured-inference-0.7.23 unstructured.pytesseract-0.3.12 urllib3-2.2.1 uvicorn-0.28.0 uvloop-0.19.0 validators-0.22.0 vllm-0.2.7 wasabi-1.1.2 watchdog-3.0.0 watchfiles-0.21.0 wavedrom-2.0.3.post3 wcwidth-0.2.13 weasel-0.3.4 websockets-12.0 wrapt-1.16.0 xformers-0.0.23.post1 xlrd-2.0.1 yarl-1.9.4 youtube-search-2.1.2 zipp-3.17.0

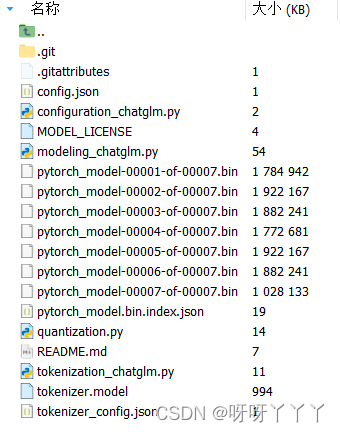

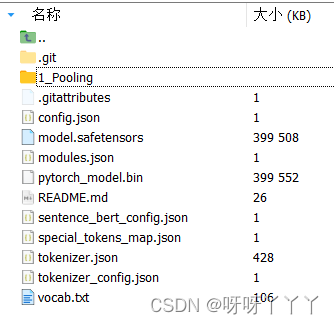

6、下载模型

下载两个模型:M3e-base 内置模型和 chatglm3-6b 模型。

sudo apt-get install git-lfs git clone https://gitee.com/hf-models/m3e-base.git git clone https://gitee.com/hf-models/chatglm-6b.git

如果需要上 huggingface.co 获取模型可能需要科学上网工具。

7、修改配置文件

批量修改配置文件名

批量复制configs目录下所有的配置文件,去掉example后缀:

# cd Langchain-Chatchat # 批量复制configs目录下所有配置文件,去掉example python copy_config_example.py

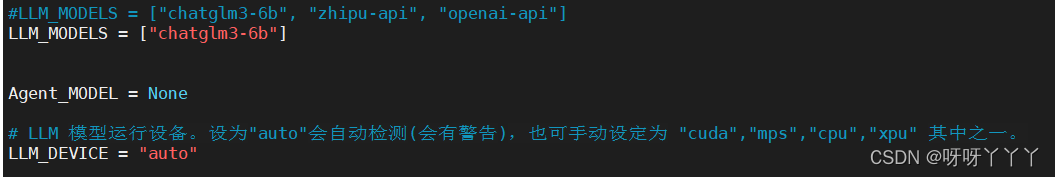

修改model_config.py文件

修改m3e-base的模型本地路径(注意是双反斜杠"\\"):

修改server_config.py

0.2.6之前版本,需要修改0.0.0.0为127.0.0.1不然会报错。

# 各服务器默认绑定host。如改为"0.0.0.0"需要修改下方所有XX_SERVER的host DEFAULT_BIND_HOST = "127.0.0.1" if sys.platform != "win32" else "127.0.0.1"

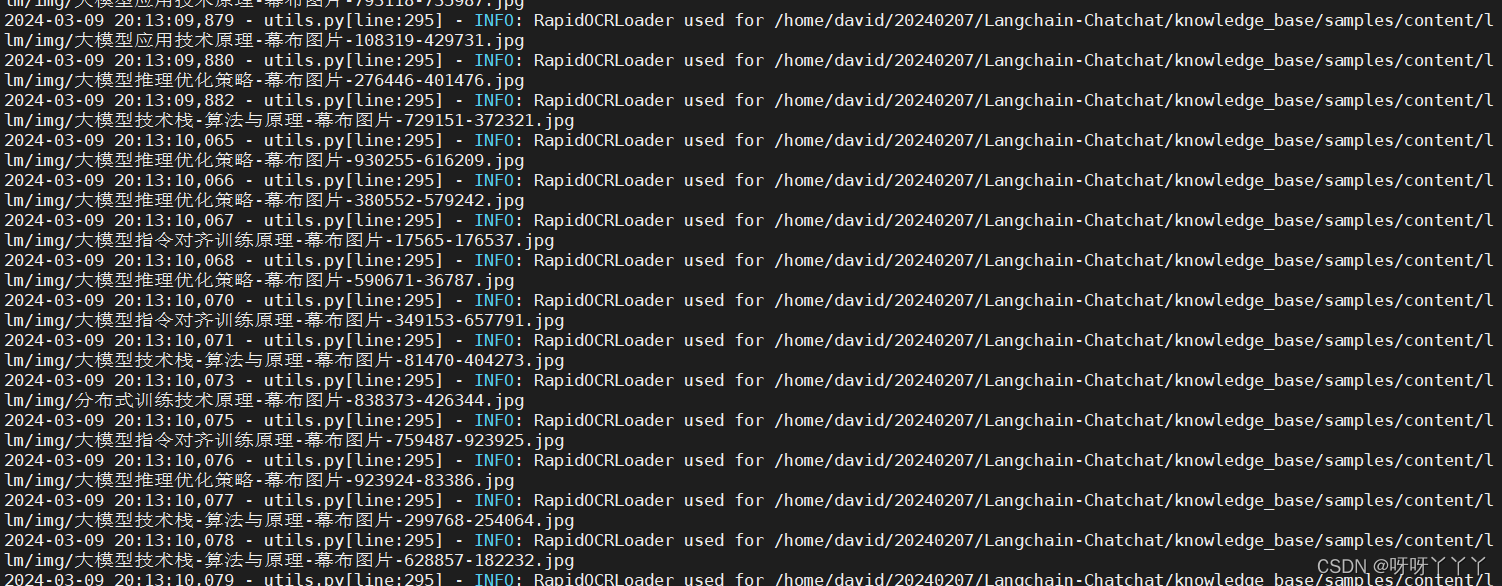

8、初始化数据库

python init_database.py --recreate-vs

配置里面模型的运行设置设置为 auto (还是建议用显卡跑)

9、一键启动项目

运行:

python startup.py -a

==============================Langchain-Chatchat Configuration==============================

操作系统:Linux-5.15.133.1-microsoft-standard-WSL2-x86_64-with-glibc2.35.

python版本:3.11.7 (main, Dec 15 2023, 18:12:31) [GCC 11.2.0]

项目版本:v0.2.10

langchain版本:0.0.354. fastchat版本:0.2.35

当前使用的分词器:ChineseRecursiveTextSplitter

当前启动的LLM模型:['chatglm3-6b'] @ cuda

{'device': 'cuda',

'host': '127.0.0.1',

'infer_turbo': False,

'model_path': 'model/chatglm3-6b',

'model_path_exists': True,

'port': 20002}

当前Embbedings模型: m3e-base @ cuda

==============================Langchain-Chatchat Configuration==============================

2024-03-09 20:18:03,837 - startup.py[line:655] - INFO: 正在启动服务:

2024-03-09 20:18:03,838 - startup.py[line:656] - INFO: 如需查看 llm_api 日志,请前往 /home/david/20240207/Langchain-Chatchat/logs

/home/david/anaconda3/envs/chat_demo/lib/python3.11/site-packages/langchain_core/_api/deprecation.py:117: LangChainDeprecationWarning: 模型启动功能将于 Langchain-Chatchat 0.3.x重写,支持更多模式和加速启动,0.2.x中相关功能将废弃

warn_deprecated(

2024-03-09 20:18:10 | ERROR | stderr | INFO: Started server process [329625]

2024-03-09 20:18:10 | ERROR | stderr | INFO: Waiting for application startup.

2024-03-09 20:18:10 | ERROR | stderr | INFO: Application startup complete.

2024-03-09 20:18:10 | ERROR | stderr | INFO: Uvicorn running on http://127.0.0.1:20000 (Press CTRL+C to quit)

2024-03-09 20:18:10 | INFO | model_worker | Loading the model ['chatglm3-6b'] on worker 7b784767 ...

Loading checkpoint shards: 0%| | 0/7 [00:00