大数据实训(三)——MapReduce编程实例:词频统计

#MapReduce#YARN#hdfs#IDEA#JDK1.8

实验三:Mapreduce词频统计

3.1启动hadoop服务,输入命令:

start-all.sh

3.2在export目录下,创建wordcount目录,在里面创建words.txt文件,向words.txt输入下面内容。

[root@bogon~]# mkdir -p /export/wordcount [root@bogon~]# cd /export/wordcount/ [root@bogon~]# vi words.txt [root@bogon~]# cat words.txt

3.3编辑结束,上传文件到HDFS指定目录

创建/wordcount/input目录,执行命令:

hdfs dfs -mkdir -p /wordcount/input

3.4将在本地/export/wordcount/目录下的words.txt文件,上传到HDFS的/wordcount/input目录,输入命令:

hdfs dfs -put /export/wordcount/words.txt /wordcount/input

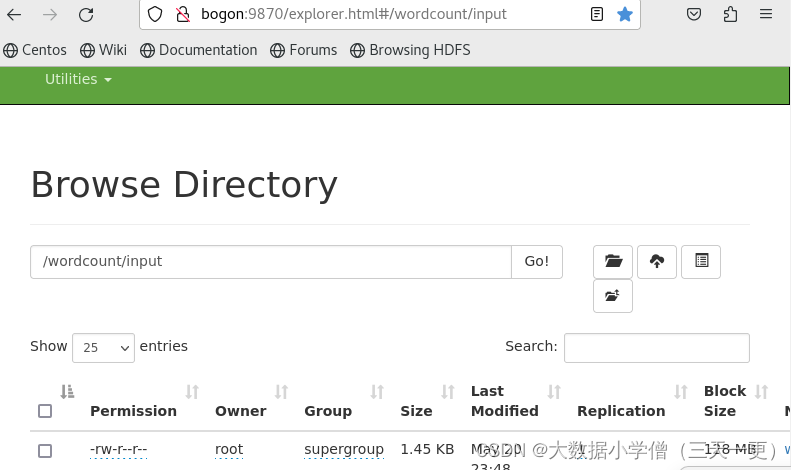

在Hadoop WebUI界面查看目录是否创建成功

3.5使用IDEA创建Maven项目MRWordCount

在pom.xml文件里添加hadoop和junit依赖,内容为:

org.apache.hadoop

hadoop-client

3.3.4

junit

junit

4.13.2

3.6创建日志文件:在resources目录里创建log4j.properties文件

log4j.rootLogger=ERROR, stdout, logfile log4j.appender.stdout=org.apache.log4j.ConsoleAppender log4j.appender.stdout.layout=org.apache.log4j.PatternLayout log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n log4j.appender.logfile=org.apache.log4j.FileAppender log4j.appender.logfile.File=target/wordcount.log log4j.appender.logfile.layout=org.apache.log4j.PatternLayout log4j.appender.logfile.layout.ConversionPattern=%d %p [%c] - %m%n

3.7创建词频统计映射器类

(1)创建net.army.mr包,在弹出的new package对话框中输入net.army.mr

(2)在net.army.mr包下创建WordCountMapper类

package net.army.mr;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.io.Text;

import java.io.IOException;

/**

* 功能:词频统计映射器类

*/

public class WordCountMapper extends Mapper {

@Override

protected void map(LongWritable key, Text value, Context context)

throws IOException, InterruptedException {

// 获取行内容

String line = value.toString();

// 按空格拆分成单词数组

String[] words = line.split(" ");

// 遍历单词数组,生成输出键值对

for (String word : words) {

context.write(new Text(word), new IntWritable(1));

}

}

}

3.8创建WordCountReducer类

package net.army.mr;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

/**

* 功能:词频统计归并类

*/

public class WordCountReducer extends Reducer {

@Override

protected void reduce(Text key, Iterable values, Context context)

throws IOException, InterruptedException {

// 定义键(单词)出现次数

int count = 0;

// 遍历输入值迭代器

for (IntWritable value : values) {

count = count + value.get(); // 针对此案例,可以写为count++;

}

// 生成新的键,格式为(word,count)

String newKey = "(" + key.toString() + "," + count + ")";

// 输出新的键值对

context.write(new Text(newKey), NullWritable.get());

}

}

3.9创建WordCountDriver类

package net.army.mr;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FSDataInputStream;

import org.apache.hadoop.fs.FileStatus;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IOUtils;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.net.URI;

/**

* 功能:词频统计驱动器类

*/

public class WordCountDriver {

public static void main(String[] args) throws Exception {

// 创建配置对象

Configuration conf = new Configuration();

// 设置客户端使用数据节点主机名属性

conf.set("dfs.client.use.datanode.hostname", "true");

// 获取作业实例

Job job = Job.getInstance(conf);

// 设置作业启动类

job.setJarByClass(WordCountDriver.class);

// 设置Mapper类

job.setMapperClass(WordCountMapper.class);

// 设置map任务输出键类型

job.setMapOutputKeyClass(Text.class);

// 设置map任务输出值类型

job.setMapOutputValueClass(IntWritable.class);

// 设置Reducer类

job.setReducerClass(WordCountReducer.class);

// 设置reduce任务输出键类型

job.setOutputKeyClass(Text.class);

// 设置reduce任务输出值类型

job.setOutputValueClass(NullWritable.class);

// 定义uri字符串

String uri = "hdfs://bogon:9000";

// 创建输入目录

Path inputPath = new Path(uri + "/wordcount/input");

// 创建输出目录

Path outputPath = new Path(uri + "/wordcount/output");

// 获取文件系统

FileSystem fs = FileSystem.get(new URI(uri), conf);

// 删除输出目录(第二个参数设置是否递归)

fs.delete(outputPath, true);

// 给作业添加输入目录(允许多个)

FileInputFormat.addInputPath(job, inputPath);

// 给作业设置输出目录(只能一个)

FileOutputFormat.setOutputPath(job, outputPath);

// 等待作业完成

job.waitForCompletion(true);

// 输出统计结果

System.out.println("======统计结果======");

FileStatus[] fileStatuses = fs.listStatus(outputPath);

for (int i = 1; i

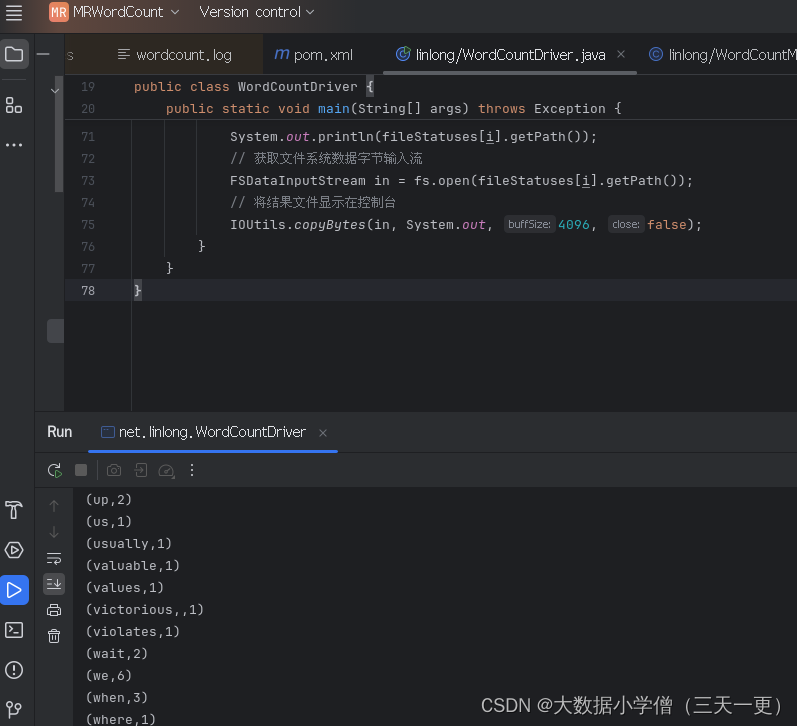

3.10运行词频统计驱动器类WordCountDriver,查看结果

文章版权声明:除非注明,否则均为主机测评原创文章,转载或复制请以超链接形式并注明出处。