Week P10: License Plate Recognition with PyTorch


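- 🍨 This post is a learning-log entry from the 🔗365天深度学习训练营 (365-Day Deep Learning Training Camp)

- 🍖 Original author: K同学啊

In the previous cases we mostly used the datasets.ImageFolder function to import a dataset that was already sorted into class folders as a Dataset, and then loaded that Dataset with DataLoader. But how do we import and recognize data that cannot be sorted into class folders this way?

This week I define a custom MyDataset to load a license plate dataset and carry out license plate recognition.

🍺 Basic requirements:

- Study and understand this post

🍺 Stretch goal:

- Recognize a single license plate image

🏡 My environment:

- Language: Python 3.8

- Development environment: Jupyter Lab

- Deep learning environment:

- torch==2.2.2

- torchvision==0.17.2

Preparation

- Use the GPU if the device supports one; otherwise use the CPU

- On a Mac, the GPU is accessed through the mps backend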

    from torchvision.transforms import transforms
    from torch.utils.data import DataLoader
    from torchvision import datasets
    import torchvision.models as models
    import torch.nn.functional as F
    import torch.nn as nn
    import torch, torchvision
    import os, PIL, pathlib, warnings

    warnings.filterwarnings("ignore")  # suppress warnings

    # True only if the current macOS version is at least 12.3
    print(torch.backends.mps.is_available())
    # True only if the current PyTorch installation was built with MPS enabled
    print(torch.backends.mps.is_built())

    # Select the hardware device: use the GPU (mps) if available, otherwise the CPU
    device = torch.device("mps" if torch.backends.mps.is_available() else "cpu")
    device  # the GPU is in use here

Output:

    True
    True
    device(type='mps')
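The cell above only checks for mps. As a minimal sketch of the more general rule stated in the preparation notes (GPU if available, otherwise CPU), the helper below also checks for CUDA. The function name pick_device is an assumption made for illustration and is not part of the original post.

```python
import torch

def pick_device() -> torch.device:
    """Return the best available device: CUDA first, then MPS, then CPU."""
    if torch.cuda.is_available():
        return torch.device("cuda")
    if torch.backends.mps.is_available():
        return torch.device("mps")
    return torch.device("cpu")

device = pick_device()
print(f"Using {device} device")
```

With this helper the same notebook should run unchanged on a Mac, on a machine with an NVIDIA GPU, or on a CPU-only machine.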

I. Importing the Data

1.1. Getting the Class Names

    import os, PIL, random, pathlib

    data_dir = './data/p10/015_licence_plate/'
    data_dir = pathlib.Path(data_dir)

    data_paths = list(data_dir.glob('*'))
    # File names look like "000008250_沪G1CE81.jpg"; the plate text follows the underscore
    classeNames = [str(path).split("/")[-1].split("_")[1].split(".")[0] for path in data_paths]
    classeNames[:3]

Output:

    ['沪G1CE81', '云G86LR6', '鄂U71R9F']

    data_paths = list(data_dir.glob('*'))
    data_paths_str = [str(path) for path in data_paths]
    data_paths_str[:3]

Output:

    ['data/p10/015_licence_plate/000008250_沪G1CE81.jpg',
     'data/p10/015_licence_plate/000015082_云G86LR6.jpg',
     'data/p10/015_licence_plate/000004721_鄂U71R9F.jpg']
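The label extraction above splits the path string on "/", which assumes POSIX-style separators. A small sketch of an alternative that relies on pathlib instead is shown below. The helper name plate_from_path is hypothetical, and the example path is the first one listed in the output above.

```python
from pathlib import Path

def plate_from_path(path) -> str:
    """Extract the plate text from a file name like '000008250_沪G1CE81.jpg'."""
    # Path.stem drops both the directory part and the file extension.
    return Path(path).stem.split("_")[1]

example = "data/p10/015_licence_plate/000008250_沪G1CE81.jpg"
print(plate_from_path(example))  # 沪G1CE81
```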

1.2. Visualizing the Data

    import os, PIL, random, pathlib
    import matplotlib.pyplot as plt

    plt.figure(figsize=(14, 5))
    plt.suptitle("数据示例", fontsize=15)

    for i in range(18):
        plt.subplot(3, 6, i + 1)
        # display the image
        images = plt.imread(data_paths_str[i])
        plt.imshow(images)

    plt.show()

1.3. Converting Labels to Numbers

    import numpy as np

    char_enum = ["京","沪","津","渝","冀","晋","蒙","辽","吉","黑","苏","浙","皖","闽","赣","鲁",
                 "豫","鄂","湘","粤","桂","琼","川","贵","云","藏","陕","甘","青","宁","新","军","使"]

    number   = [str(i) for i in range(0, 10)]    # digits 0 to 9
    alphabet = [chr(i) for i in range(65, 91)]   # letters A to Z

    char_set       = char_enum + number + alphabet
    char_set_len   = len(char_set)
    label_name_len = len(classeNames[0])

    # Convert a plate string into a one-hot matrix of shape [label_name_len, char_set_len]
    def text2vec(text):
        vector = np.zeros([label_name_len, char_set_len])
        for i, c in enumerate(text):
            idx = char_set.index(c)
            vector[i][idx] = 1.0
        return vector

    all_labels = [text2vec(i) for i in classeNames]

1.4. Loading the Data Files

    import os
    import pandas as pd
    from torchvision.io import read_image
    from torch.utils.data import Dataset
    import torch.utils.data as data
    from PIL import Image

    class MyDataset(data.Dataset):
        def __init__(self, all_labels, data_paths_str, transform):
            self.img_labels = all_labels      # one-hot labels
            self.img_dir    = data_paths_str  # list of image file paths
            self.transform  = transform       # transform applied to each image

        def __len__(self):
            return len(self.img_labels)

        def __getitem__(self, index):
            # Read the image with PIL and force three RGB channels
            image = Image.open(self.img_dir[index]).convert('RGB')
            label = self.img_labels[index]    # label that matches this image

            if self.transform:
                image = self.transform(image)

            return image, label               # return the image and its label

    # More on transforms.Compose: https://blog.csdn.net/qq_38251616/article/details/124878863
    train_transforms = transforms.Compose([
        transforms.Resize([224, 224]),  # resize every input image to the same size
        transforms.ToTensor(),          # convert a PIL Image or numpy.ndarray to a tensor scaled to [0, 1]
        transforms.Normalize(           # standardize each channel, which helps the model converge
            mean=[0.485, 0.456, 0.406],
            std =[0.229, 0.224, 0.225])  # these mean and std values are the widely used ImageNet statistics
    ])

    total_data = MyDataset(all_labels, data_paths_str, train_transforms)
    total_data

1.5. Splitting the Data

    train_size = int(0.8 * len(total_data))
    test_size  = len(total_data) - train_size

    train_dataset, test_dataset = torch.utils.data.random_split(total_data, [train_size, test_size])
    train_size, test_size

Output:

    (10940, 2735)
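random_split shuffles the indices randomly, so the train/test membership changes on every run. If a reproducible split is wanted, one option is to pass a seeded generator. The sketch below uses a toy 10-item dataset in place of total_data, and the seed value 42 is an arbitrary assumption.

```python
import torch
from torch.utils.data import TensorDataset, random_split

# A toy dataset of 10 items stands in for total_data.
toy_data = TensorDataset(torch.arange(10))
train_size = int(0.8 * len(toy_data))
test_size = len(toy_data) - train_size

def split(seed: int):
    """Split toy_data 80/20 with a generator seeded by `seed`."""
    generator = torch.Generator().manual_seed(seed)
    return random_split(toy_data, [train_size, test_size], generator=generator)

train_a, test_a = split(42)
train_b, test_b = split(42)
print(len(train_a), len(test_a))                         # 8 2
print(list(train_a.indices) == list(train_b.indices))    # True
```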

    train_loader = torch.utils.data.DataLoader(train_dataset,
                                               batch_size=16,
                                               shuffle=True)
    test_loader = torch.utils.data.DataLoader(test_dataset,
                                              batch_size=16,
                                              shuffle=True)

    print("The number of images in a training set is: ", len(train_loader)*16)
    print("The number of images in a test set is: ", len(test_loader)*16)
    print("The number of batches per epoch is: ", len(train_loader))

Output:

    The number of images in a training set is:  10944
    The number of images in a test set is:  2736
    The number of batches per epoch is:  684

These two image counts are the number of batches times 16, so they slightly overstate the actual sizes (10940 and 2735) because the last batch of each loader is not full.

    for X, y in test_loader:
        print("Shape of X [N, C, H, W]: ", X.shape)
        print("Shape of y: ", y.shape, y.dtype)
        break

Output:

    Shape of X [N, C, H, W]:  torch.Size([16, 3, 224, 224])
    Shape of y:  torch.Size([16, 7, 69]) torch.float64
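Each label is a 7 x 69 one-hot matrix, which matches the shape of y printed above. To read such a matrix back as a plate string (useful later when decoding a prediction for a single plate), one can take the argmax of every row. The sketch below repeats the character set from section 1.3 so that it runs on its own. The helper vec2text is hypothetical and does not appear in the original post.

```python
import numpy as np

char_enum = ["京","沪","津","渝","冀","晋","蒙","辽","吉","黑","苏","浙","皖","闽","赣","鲁",
             "豫","鄂","湘","粤","桂","琼","川","贵","云","藏","陕","甘","青","宁","新","军","使"]
number = [str(i) for i in range(10)]
alphabet = [chr(i) for i in range(65, 91)]
char_set = char_enum + number + alphabet

def text2vec(text):
    """One-hot encode a plate string, one row per character."""
    vector = np.zeros([len(text), len(char_set)])
    for i, c in enumerate(text):
        vector[i][char_set.index(c)] = 1.0
    return vector

def vec2text(vector):
    """Hypothetical inverse: map each row's largest entry back to a character."""
    return "".join(char_set[idx] for idx in np.argmax(vector, axis=-1))

vec = text2vec("沪G1CE81")
print(vec.shape)      # (7, 69)
print(vec2text(vec))  # 沪G1CE81
```

The same argmax-per-row idea should apply to a model output of shape [7, 69], since the largest logit in each row picks the predicted character.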

II. Building a Custom Model

2.1. Building the Model

    class Network_bn(nn.Module):
        def __init__(self):
            super(Network_bn, self).__init__()
            # nn.Conv2d() arguments:
            #   1st (in_channels):  number of input channels
            #   2nd (out_channels): number of output channels
            #   3rd (kernel_size):  size of the convolution kernel
            #   4th (stride):       stride, default 1
            #   5th (padding):      padding size, default 0
            self.conv1 = nn.Conv2d(in_channels=3, out_channels=12, kernel_size=5, stride=1, padding=0)
            self.bn1 = nn.BatchNorm2d(12)
            self.conv2 = nn.Conv2d(in_channels=12, out_channels=12, kernel_size=5, stride=1, padding=0)
            self.bn2 = nn.BatchNorm2d(12)
            self.pool = nn.MaxPool2d(2, 2)
            self.conv4 = nn.Conv2d(in_channels=12, out_channels=24, kernel_size=5, stride=1, padding=0)
            self.bn4 = nn.BatchNorm2d(24)
            self.conv5 = nn.Conv2d(in_channels=24, out_channels=24, kernel_size=5, stride=1, padding=0)
            self.bn5 = nn.BatchNorm2d(24)
            self.fc1 = nn.Linear(24*50*50, label_name_len*char_set_len)
            self.reshape = Reshape([label_name_len, char_set_len])

        def forward(self, x):
            x = F.relu(self.bn1(self.conv1(x)))
            x = F.relu(self.bn2(self.conv2(x)))
            x = self.pool(x)
            x = F.relu(self.bn4(self.conv4(x)))
            x = F.relu(self.bn5(self.conv5(x)))
            x = self.pool(x)
            x = x.view(-1, 24*50*50)
            x = self.fc1(x)

            # final reshape to [batch, label_name_len, char_set_len]
            x = self.reshape(x)

            return x

    # Reshape layer
    class Reshape(nn.Module):
        def __init__(self, shape):
            super(Reshape, self).__init__()
            self.shape = shape

        def forward(self, x):
            return x.view(x.size(0), *self.shape)

    print("Using {} device".format(device))

    model = Network_bn().to(device)
    model

Output:

    Using mps device
    Network_bn(
      (conv1): Conv2d(3, 12, kernel_size=(5, 5), stride=(1, 1))
      (bn1): BatchNorm2d(12, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (conv2): Conv2d(12, 12, kernel_size=(5, 5), stride=(1, 1))
      (bn2): BatchNorm2d(12, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (pool): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      (conv4): Conv2d(12, 24, kernel_size=(5, 5), stride=(1, 1))
      (bn4): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (conv5): Conv2d(24, 24, kernel_size=(5, 5), stride=(1, 1))
      (bn5): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (fc1): Linear(in_features=60000, out_features=483, bias=True)
      (reshape): Reshape()
    )
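The value 24*50*50 = 60000 used for fc1 follows from the input size and the layer settings. As a quick check, the sketch below applies the standard output-size formula, floor((size + 2*padding - kernel) / stride) + 1, layer by layer to a 224-pixel input. The helper name conv_out is an assumption made for illustration.

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output size of a convolution or pooling layer along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1

size = 224
size = conv_out(size, kernel=5)            # conv1 -> 220
size = conv_out(size, kernel=5)            # conv2 -> 216
size = conv_out(size, kernel=2, stride=2)  # pool  -> 108
size = conv_out(size, kernel=5)            # conv4 -> 104
size = conv_out(size, kernel=5)            # conv5 -> 100
size = conv_out(size, kernel=2, stride=2)  # pool  -> 50
print(size, 24 * size * size)              # 50 60000
```

If the Resize target in the transforms were changed, the in_features of fc1 would need to be recomputed the same way.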

2.2. Inspecting the Model

    !pip install torchsummary

Output:

    Looking in indexes: https://mirrors.aliyun.com/pypi/simple
    Requirement already satisfied: torchsummary in /Users/henry/src/miniconda3/lib/python3.8/site-packages (1.5.1)

    # Report the model's parameter count and other statistics
    import torchsummary as summary
    summary.summary(model.to("cpu"), (3, 224, 224))

Output:

    ----------------------------------------------------------------
            Layer (type)               Output Shape         Param #
    ================================================================
                Conv2d-1         [-1, 12, 220, 220]             912
           BatchNorm2d-2         [-1, 12, 220, 220]              24
                Conv2d-3         [-1, 12, 216, 216]           3,612
           BatchNorm2d-4         [-1, 12, 216, 216]              24
             MaxPool2d-5         [-1, 12, 108, 108]               0
                Conv2d-6         [-1, 24, 104, 104]           7,224
           BatchNorm2d-7         [-1, 24, 104, 104]              48
                Conv2d-8         [-1, 24, 100, 100]          14,424
           BatchNorm2d-9         [-1, 24, 100, 100]              48
            MaxPool2d-10           [-1, 24, 50, 50]               0
               Linear-11                  [-1, 483]      28,980,483
              Reshape-12                [-1, 7, 69]               0
    ================================================================
    Total params: 29,006,799
    Trainable params: 29,006,799
    Non-trainable params: 0
    ----------------------------------------------------------------
    Input size (MB): 0.57
    Forward/backward pass size (MB): 26.56
    Params size (MB): 110.65
    Estimated Total Size (MB): 137.79
    ----------------------------------------------------------------

Note the model's output shape, [-1, 7, 69]. Our earlier networks all produced two-dimensional outputs such as [-1, 7], [-1, 2], or [-1, 4]. If you need a model whose output has more dimensions, this case is a good example.

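📮 Question: what does the -1 in [-1, 7, 69] mean?

When we do not know the size of a dimension in advance but want it inferred automatically, we can use -1. The -1 tells PyTorch to infer that dimension from the others, keeping the other dimensions as given and the total number of elements in the tensor unchanged.

For example, [-1, 7, 69] describes a three-dimensional tensor whose first dimension is left unspecified, whose second dimension is 7, and whose third dimension is 69. The -1 resolves to the total number of elements divided by 7 * 69, so the shape fits whatever input actually arrives.

In practice, -1 is usually used for the batch dimension, because the batch size can vary during training. Using -1 lets the model handle batches of different sizes. In the torchsummary table above, the -1 stands for this batch dimension.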
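A short illustration of this inference, using view on tensors of two different batch sizes. The tensors here are dummy zeros, not model outputs.

```python
import torch

flat = torch.zeros(16, 7 * 69)     # a batch of 16 flattened outputs (483 values each)
shaped = flat.view(-1, 7, 69)      # -1 is inferred as 16
print(shaped.shape)                # torch.Size([16, 7, 69])

smaller = torch.zeros(5, 483).view(-1, 7, 69)   # the same call adapts to a batch of 5
print(smaller.shape)               # torch.Size([5, 7, 69])
```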

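III. Training the Model

3.1. Optimizer and Loss Function

    optimizer = torch.optim.Adam(model.parameters(),
                                 lr=1e-4,
                                 weight_decay=0.0001)

    loss_model = nn.CrossEntropyLoss()

One of this week's tasks: the code below tracks and updates the loss. Please fill in the part that tracks accuracy, so that the accuracy of each test pass is obtained.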

Hint: pred.shape and y.shape are both [batch, 7, 69], so take care with the dimensions when computing accuracy~
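One possible way to approach this task, offered as a sketch rather than the official solution: take the argmax over the last dimension of both pred and y to get character indices, then compare them. The helper name count_correct is hypothetical, and the toy tensors below only mimic the [batch, 7, 69] shape.

```python
import torch

def count_correct(pred: torch.Tensor, y: torch.Tensor):
    """Return (correct characters, fully correct plates) for one batch.

    pred holds scores and y holds one-hot labels, both shaped [batch, 7, 69].
    """
    pred_idx = pred.argmax(dim=2)     # [batch, 7] predicted character indices
    true_idx = y.argmax(dim=2)        # [batch, 7] true character indices
    matches = pred_idx == true_idx    # [batch, 7] booleans
    char_correct = matches.sum().item()
    plate_correct = matches.all(dim=1).sum().item()
    return char_correct, plate_correct

# Toy check: 2 plates, the second one has a single wrong character.
y = torch.zeros(2, 7, 69)
y[:, :, 3] = 1.0
pred = y.clone()
pred[1, 0, 3] = 0.0
pred[1, 0, 5] = 1.0
print(count_correct(pred, y))  # (13, 1)
```

Inside a test loop, the character count could be accumulated across batches and divided by the dataset size times 7 to get per-character accuracy, or the plate count divided by the dataset size to get whole-plate accuracy. Which of the two to report is a design choice.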

    def test(model, test_loader, loss_model):
        size = len(test_loader.dataset)
        num_batches = len(test_loader)

        model.eval()
        test_loss, correct = 0, 0
        with torch.no_grad():
            for X, y in test_loader:
                X, y = X.to(device), y.to(device)
                pred = model(X)

                test_loss += loss_model(pred, y).item()
                # Exercise: update `correct` here; it stays 0 until this is filled in

        test_loss /= num_batches

        print(f"Avg loss: {test_loss:>8f} \n")
        return correct, test_loss

    def train(model, train_loader, loss_model, optimizer):
        model = model.to(device)
        model.train()

        for i, (images, labels) in enumerate(train_loader, 0):  # 0 is the starting index
            # Tensors can be used directly; the deprecated Variable wrapper is not needed
            images = images.to(device)
            labels = labels.to(device)

            optimizer.zero_grad()
            outputs = model(images)

            loss = loss_model(outputs, labels)
            loss.backward()
            optimizer.step()

            if i % 1000 == 0:
                print('[%5d] loss: %.3f' % (i, loss.item()))

3.2. Training the Model

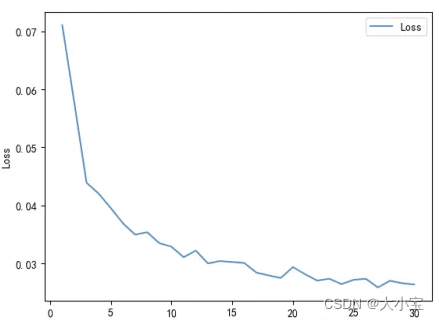

    test_acc_list  = []
    test_loss_list = []
    epochs = 30

    for t in range(epochs):
        print(f"Epoch {t+1}\n-------------------------------")
        train(model, train_loader, loss_model, optimizer)
        test_acc, test_loss = test(model, test_loader, loss_model)
        test_acc_list.append(test_acc)
        test_loss_list.append(test_loss)
    print("Done!")

Output:

    Epoch 1
    -------------------------------
    [    0] loss: 0.213
    Avg loss: 0.071063

    Epoch 2
    -------------------------------
    [    0] loss: 0.033
    Avg loss: 0.057604

    ......
    Epoch 30
    -------------------------------
    [    0] loss: 0.014
    Avg loss: 0.026364

    Done!
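One detail about the loss from section 3.1 may be worth checking. As documented, nn.CrossEntropyLoss treats dimension 1 of its input as the class dimension, so with tensors shaped [batch, 7, 69] the softmax would run over the 7 character positions rather than over the 69 characters. The sketch below is not the original author's code. It shows one common alternative, assuming one-hot labels as built by text2vec: flatten the position axis so that every character position becomes its own 69-way classification. The helper name per_character_loss is hypothetical.

```python
import torch
import torch.nn as nn

loss_model = nn.CrossEntropyLoss()

def per_character_loss(pred: torch.Tensor, y: torch.Tensor) -> torch.Tensor:
    """Cross-entropy over the 69 characters at each of the 7 positions."""
    num_classes = pred.size(-1)
    logits = pred.reshape(-1, num_classes)     # [batch * 7, 69]
    targets = y.argmax(dim=-1).reshape(-1)     # [batch * 7] class indices
    return loss_model(logits, targets)

# Toy check with random scores and arbitrary one-hot labels.
torch.manual_seed(0)
pred = torch.randn(4, 7, 69)
y = torch.zeros(4, 7, 69)
y[:, :, 10] = 1.0
loss = per_character_loss(pred, y)
print(loss.shape, loss.item() > 0)  # torch.Size([]) True
```

Loss values computed this way would not be directly comparable with the numbers in the training log above, since that log was produced with the loss as originally defined.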

IV. Analyzing the Results

    import numpy as np
    import matplotlib.pyplot as plt

    x = [i for i in range(1, 31)]  # epochs 1 to 30

    plt.plot(x, test_loss_list, label="Loss", alpha=0.8)

    plt.xlabel("Epoch")
    plt.ylabel("Loss")

    plt.legend()
    plt.show()
