SQOOP安装与使用

SQOOP安装及使用

文章目录

- SQOOP安装及使用

- SQOOP安装

- 1、上传并解压

- 2、修改配置文件

- 3、修改环境变量

- 4、添加MySQL连接驱动

- 5、测试

- 准备MySQL数据

- 登录MySQL数据库

- 创建student数据库

- 切换数据库并导入数据

- 另外一种导入数据的方式

- 使用Navicat运行SQL文件

- 导出MySQL数据库

- import

- MySQLToHDFS

- 编写脚本,保存为MySQLToHDFS.conf

- 执行脚本

- 注意事项:

- MySQLToHive

- 编写脚本,并保存为MySQLToHIVE.conf文件

- 在Hive中创建testsqoop库

- 执行脚本

- --direct

- -e参数的使用

- MySQLToHBase

- 编写脚本,并保存为MySQLToHBase.conf

- 在HBase中创建student表

- 执行脚本

- export

- HDFSToMySQL

- 编写脚本,并保存为HDFSToMySQL.conf

- 先清空MySQL student表中的数据,不然会造成主键冲突

- 执行脚本

- 查看sqoop help

- 分区导入

- 增量导入

SQOOP安装

1、上传并解压

tar -zxvf sqoop-1.4.7_hadoop3.X.tar.gz

2、修改配置文件

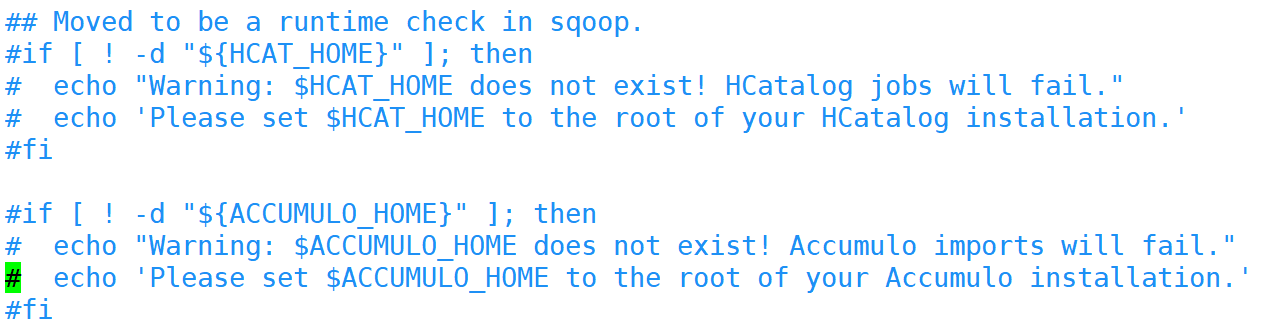

# 切换到sqoop配置文件目录 cd /usr/local/soft/sqoop-1.4.7/conf # 复制配置文件并重命名 cp sqoop-env-template.sh sqoop-env.sh # vim sqoop-env.sh 编辑配置文件,并加入以下内容 export HADOOP_COMMON_HOME=/usr/local/soft/hadoop-3.1.1 export HADOOP_MAPRED_HOME=/usr/local/soft/hadoop-3.1.1 # 切换到bin目录 cd /usr/local/soft/sqoop-1.4.7/bin # vim configure-sqoop 修改配置文件,注释掉没用的内容(就是为了去掉警告信息)

3、修改环境变量

vim /etc/profile # 将sqoop的目录加入环境变量

4、添加MySQL连接驱动

# 从HIVE中复制MySQL连接驱动到$SQOOP_HOME/lib cp /usr/local/soft/hive-1.2.1/lib/mysql-connector-java-5.1.49.jar /usr/local/soft/sqoop-1.4.7/lib/

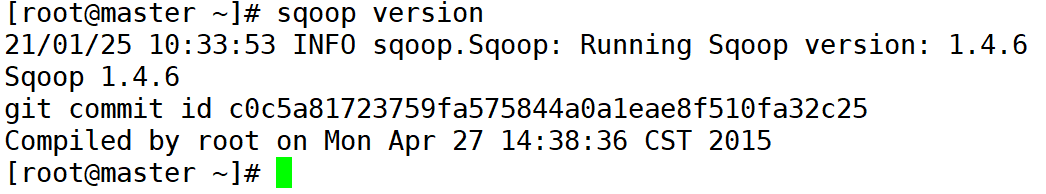

5、测试

# 打印sqoop版本 sqoop version

# 测试MySQL连通性 sqoop list-databases -connect jdbc:mysql://master:3306?useSSL=false -username root -password 123456

准备MySQL数据

登录MySQL数据库

mysql -u root -p123456;

创建student数据库

create database student;

切换数据库并导入数据

# mysql shell中执行 use student; source /usr/local/soft/shell/student.sql;

另外一种导入数据的方式

# linux shell中执行 mysql -u root -p123456 student1,那么需要结合--split-by参数,指定分割键,以确定每个map任务到底读取哪一部分数据,最好指定数值型的列,最好指定主键(或者分布均匀的列=>避免每个map任务处理的数据量差别过大)

3、如果指定的分割键数据分布不均,可能导致数据倾斜问题

4、分割的键最好指定数值型的,而且字段的类型为int、bigint这样的数值型

5、编写脚本的时候,注意:例如:--username参数,参数值不能和参数名同一行

--username root // 错误的 // 应该分成两行 --username root

6、运行的时候会报错InterruptedException,hadoop2.7.6自带的问题,忽略即可

21/01/25 14:32:32 WARN hdfs.DFSClient: Caught exception java.lang.InterruptedException at java.lang.Object.wait(Native Method) at java.lang.Thread.join(Thread.java:1252) at java.lang.Thread.join(Thread.java:1326) at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.closeResponder(DFSOutputStream.java:716) at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.endBlock(DFSOutputStream.java:476) at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:652)

7、实际上sqoop在读取mysql数据的时候,用的是JDBC的方式,所以当数据量大的时候,效率不是很高

8、sqoop底层通过MapReduce完成数据导入导出,只需要Map任务,不需要Reduce任务

9、每个Map任务会生成一个文件

MySQLToHive

先会将MySQL的数据导出来并在HDFS上找个目录临时存放,默认为:/user/用户名/表名

然后再将数据加载到Hive中,加载完成后,会将临时存放的目录删除

编写脚本,并保存为MySQLToHIVE.conf文件

import --connect jdbc:mysql://master:3306/student?useSSL=false --username root --password 123456 --table score --fields-terminated-by "\t" --lines-terminated-by "\n" --m 3 --split-by student_id --hive-import --hive-overwrite --create-hive-table --hive-database bigdata --hive-table score_sqoop --delete-target-dir

在Hive中创建testsqoop库

hive> create database testsqoop;

如果重新导入表存在,那么就需要将–create-hive-table 选项去除

如果遇到如下错误

22/04/18 09:08:42 ERROR tool.ImportTool: Import failed: java.io.IOException: java.la ng.ClassNotFoundException: org.apache.hadoop.hive.conf.HiveConf at org.apache.sqoop.hive.HiveConfig.getHiveConf(HiveConfig.java:50) at org.apache.sqoop.hive.HiveImport.getHiveArgs(HiveImport.java:392) at org.apache.sqoop.hive.HiveImport.executeExternalHiveScript(HiveImport.jav a:379) at org.apache.sqoop.hive.HiveImport.executeScript(HiveImport.java:337) at org.apache.sqoop.hive.HiveImport.importTable(HiveImport.java:241) at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:537) at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:628) at org.apache.sqoop.Sqoop.run(Sqoop.java:147) at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70) at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183) at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234) at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243) at org.apache.sqoop.Sqoop.main(Sqoop.java:252) Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.hive.conf.HiveConf at java.net.URLClassLoader.findClass(URLClassLoader.java:381) at java.lang.ClassLoader.loadClass(ClassLoader.java:424) at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349) at java.lang.ClassLoader.loadClass(ClassLoader.java:357) at java.lang.Class.forName0(Native Method) at java.lang.Class.forName(Class.java:264) at org.apache.sqoop.hive.HiveConfig.getHiveConf(HiveConfig.java:44) ... 12 more解决办法:

cp /usr/local/soft/hive-1.2.1/lib/hive-common-1.2.1.jar /usr/local/soft/sqoop-1.4.7/lib/

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/hive/shims/ShimLoader at org.apache.hadoop.hive.conf.HiveConf$ConfVars.(HiveConf.java:368) at org.apache.hadoop.hive.conf.HiveConf.(HiveConf.java:105) at java.lang.Class.forName0(Native Method) at java.lang.Class.forName(Class.java:264) at org.apache.sqoop.hive.HiveConfig.getHiveConf(HiveConfig.java:44) at org.apache.sqoop.hive.HiveImport.getHiveArgs(HiveImport.java:392) at org.apache.sqoop.hive.HiveImport.executeExternalHiveScript(HiveImport.java:379) at org.apache.sqoop.hive.HiveImport.executeScript(HiveImport.java:337) at org.apache.sqoop.hive.HiveImport.importTable(HiveImport.java:241) at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:537) at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:628) at org.apache.sqoop.Sqoop.run(Sqoop.java:147) at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70) at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183) at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234) at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243) at org.apache.sqoop.Sqoop.main(Sqoop.java:252) Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.hive.shims.ShimLoader at java.net.URLClassLoader.findClass(URLClassLoader.java:381) at java.lang.ClassLoader.loadClass(ClassLoader.java:424) at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349) at java.lang.ClassLoader.loadClass(ClassLoader.java:357) ... 17 morecp /usr/local/soft/hive-1.2.1/lib/hive-shims* /usr/local/soft/sqoop-1.4.7/lib/

执行脚本

sqoop --options-file MySQLToHIVE.conf

–direct

加上这个参数,可以在导出MySQL数据的时候,使用MySQL提供的导出工具mysqldump,加快导出速度,提高效率

错误信息

22/04/18 09:42:33 INFO mapreduce.Job: Task Id : attempt_1650084984186_0048_m_000002_2, Status : FAILED Error: java.io.IOException: Cannot run program "mysqldump": error=2, 没有那个文件或目录 at java.lang.ProcessBuilder.start(ProcessBuilder.java:1048) at java.lang.Runtime.exec(Runtime.java:620) at java.lang.Runtime.exec(Runtime.java:485) at org.apache.sqoop.mapreduce.MySQLDumpMapper.map(MySQLDumpMapper.java:405) at org.apache.sqoop.mapreduce.MySQLDumpMapper.map(MySQLDumpMapper.java:49) at org.apache.hadoop.mapreduce.Mapper.run(Mapper.java:146) at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:787) at org.apache.hadoop.mapred.MapTask.run(MapTask.java:341) at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:164) at java.security.AccessController.doPrivileged(Native Method) at javax.security.auth.Subject.doAs(Subject.java:422) at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1758) at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:158) Caused by: java.io.IOException: error=2, 没有那个文件或目录 at java.lang.UNIXProcess.forkAndExec(Native Method) at java.lang.UNIXProcess.(UNIXProcess.java:247) at java.lang.ProcessImpl.start(ProcessImpl.java:134) at java.lang.ProcessBuilder.start(ProcessBuilder.java:1029) ... 12 more解决办法:

需要将master上的/usr/bin/mysqldump分发至 node1、node2的/usr/bin目录下

scp /usr/bin/mysqldump node1:/usr/bin/ scp /usr/bin/mysqldump node2:/usr/bin/

-e参数的使用

import --connect jdbc:mysql://master:3306/student?useSSL=false --username root --password 123456 --m 2 --split-by student_id --e "select * from score where student_id=1500100011 and $CONDITIONS" --fields-terminated-by "\t" --lines-terminated-by "\n" --target-dir /testQ --hive-import --hive-overwrite --create-hive-table --hive-database testsqoop --hive-table score2

分析导入日志:

22/04/18 10:24:34 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset. 22/04/18 10:24:34 INFO tool.CodeGenTool: Beginning code generation Mon Apr 18 10:24:34 CST 2022 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification. 22/04/18 10:24:34 INFO manager.SqlManager: Executing SQL statement: select * from score where student_id=1500100011 and (1 = 0) 22/04/18 10:24:34 INFO manager.SqlManager: Executing SQL statement: select * from score where student_id=1500100011 and (1 = 0) 22/04/18 10:24:34 INFO manager.SqlManager: Executing SQL statement: select * from score where student_id=1500100011 and (1 = 0) 22/04/18 10:24:34 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/local/soft/hadoop-2.7.6

解释:

其中的三条SQL语句是为了检查SQL语句是否正确

BoundingValsQuery: SELECT MIN(student_id), MAX(student_id) FROM (select * from score where student_id=1500100011 and (1 = 1) ) AS t1

MySQLToHBase

编写脚本,并保存为MySQLToHBase.conf

import --connect jdbc:mysql://master:3306/student?useSSL=false --username root --password 123456 --table student --hbase-table stu --hbase-create-table --hbase-row-key id --m 1 --column-family cf1

在HBase中创建student表

create 'stu','cf1'

执行脚本

sqoop --options-file MySQLToHBase.conf

export

HDFSToMySQL

编写脚本,并保存为HDFSToMySQL.conf

export --connect jdbc:mysql://master:3306/student?useSSL=false&useUnicode=true&characterEncoding=utf-8 --username root --password 123456 --table student2 -m 1 --columns id,name,age,gender,clazz --export-dir /sqoop/data/student1 --fields-terminated-by ','

先清空MySQL student表中的数据,不然会造成主键冲突

执行脚本

sqoop --options-file HDFSToMySQL.conf

查看sqoop help

sqoop help 21/04/26 15:50:36 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6 usage: sqoop COMMAND [ARGS] Available commands: codegen Generate code to interact with database records create-hive-table Import a table definition into Hive eval Evaluate a SQL statement and display the results export Export an HDFS directory to a database table help List available commands import Import a table from a database to HDFS import-all-tables Import tables from a database to HDFS import-mainframe Import datasets from a mainframe server to HDFS job Work with saved jobs list-databases List available databases on a server list-tables List available tables in a database merge Merge results of incremental imports metastore Run a standalone Sqoop metastore version Display version information See 'sqoop help COMMAND' for information on a specific command.

# 查看import的详细帮助 sqoop import --help

分区导入

-- 创建分区表 CREATE TABLE `partition_student`( `id` int, `name` string, `age` int, `gender` string, `clazz` string, `last_mod` string) COMMENT 'Imported by sqoop on 2023/10/20 14:52:43' partitioned by(dt string) ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe' WITH SERDEPROPERTIES ( 'field.delim'='\t', 'line.delim'='\n', 'serialization.format'='\t');

import --connect jdbc:mysql://master:3306/student?useSSL=false --username root --password 123456 --m 2 --split-by id --e "SELECT * FROM student2 WHERE substr(last_mod,1,11) = '2023-10-21' and $CONDITIONS" --fields-terminated-by "\t" --lines-terminated-by "\n" --target-dir /testQ --hive-import --hive-database testsqoop --hive-table partition_student --hive-partition-key dt --hive-partition-value 2023-10-21

sqoop --options-file insert_10_21_partition_mysql2hive.conf

增量导入

# 模拟做全量数据导入: sqoop import \ --connect 'jdbc:mysql://master:3306/student?useSSL=false' \ --username root \ --password 123456 \ --e "select * from student2 where id

文章版权声明:除非注明,否则均为主机测评原创文章,转载或复制请以超链接形式并注明出处。