k8s(4)

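Contents

Load balancer deployment

Initialization:

Add hostname entries on every host

Copy the certificates, the master component config files, and the systemd unit files from master01 to master02:

Change the IPs in the kube-apiserver, kube-controller-manager, and kube-scheduler config files on master02:

Start the services on master02 and enable them at boot:

Since the load balancers and the VIP were defined earlier, simply power on hosts 50 and 60:

Initialize the load balancers:

Edit the nginx config to set up layer-4 (TCP) reverse-proxy load balancing, pointing at the IPs of the cluster's two masters on port 6443:

Deploy the keepalived service: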

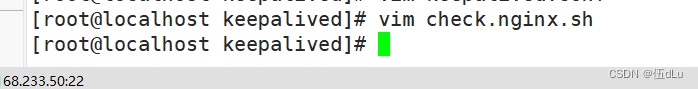

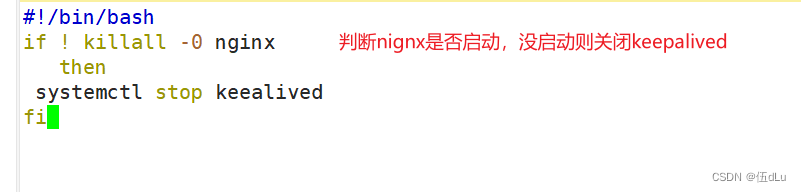

Add a script that checks whether nginx is alive:

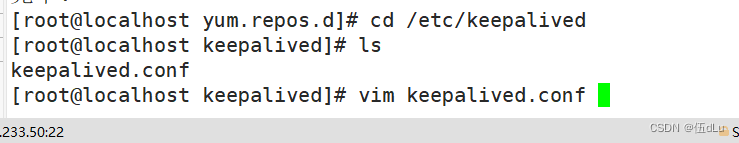

Edit the keepalived config:

Then add a script check that runs periodically:

Start keepalived:

Point bootstrap.kubeconfig, kubelet.kubeconfig, and kube-proxy.kubeconfig on every node at the VIP:

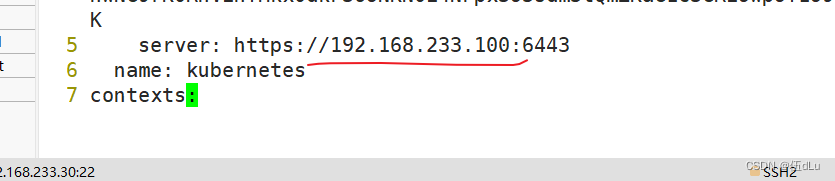

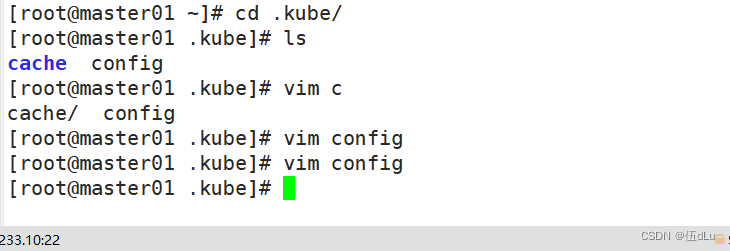

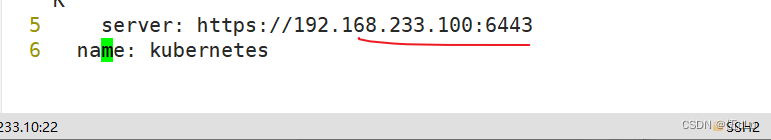

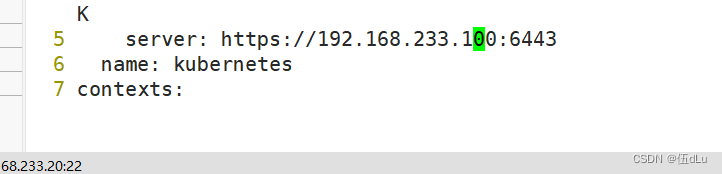

Change the IP in the config file under ~/.kube on master01:

On each master, point the server IP in kube-controller-manager.kubeconfig and kube-scheduler.kubeconfig at that master's own IP:

Load balancer deployment

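Prepare master02: 192.168.233.20

Use three or more masters where possible. This makes leader election among the components easier and keeps the cluster highly available. The deployment steps are the same on every master.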

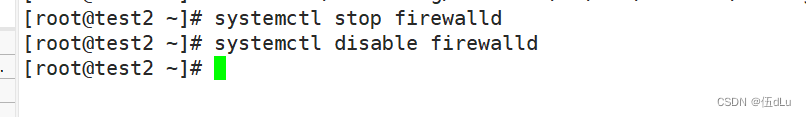

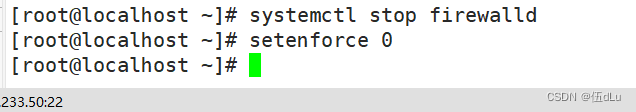

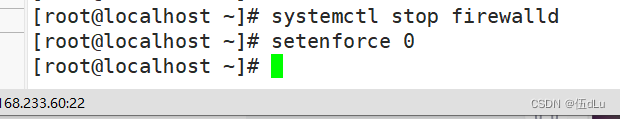

Initialization:

```shell
systemctl stop firewalld
systemctl disable firewalld
```

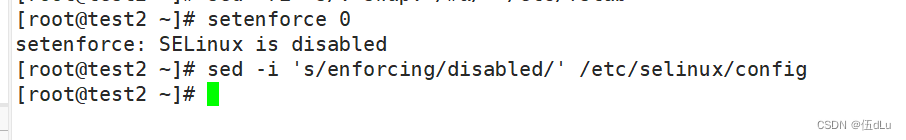

```shell
setenforce 0
sed -i 's/enforcing/disabled/' /etc/selinux/config
```

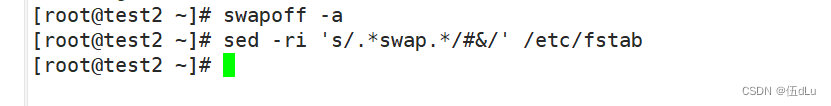

```shell
swapoff -a
sed -ri 's/.*swap.*/#&/' /etc/fstab
```

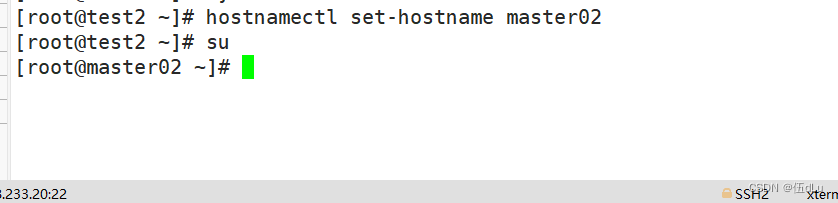
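In the `sed -ri 's/.*swap.*/#&/'` expression, `.*swap.*` matches every whole line that mentions swap, and `&` re-inserts the matched line after a `#`, so the swap entry is commented out rather than deleted. The minimal sketch below wraps the same expression in a function so you can try it on a copy of fstab first. The name `comment_swap_lines` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out every line containing "swap" in the given
# file, using the same sed expression as above (-r: extended regex,
# -i: edit in place, &: the whole matched line).
comment_swap_lines() {
    local fstab="$1"
    sed -ri 's/.*swap.*/#&/' "$fstab"
}
```

For example, `cp /etc/fstab /tmp/fstab.test && comment_swap_lines /tmp/fstab.test && diff /etc/fstab /tmp/fstab.test` shows exactly which lines would change. A line that is already commented and mentions swap just gets a second `#`, which is harmless.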

```shell
hostnamectl set-hostname master02
```

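Add hostname entries on every host

Append every host's IP address and hostname to /etc/hosts, and write the kernel parameters to /etc/sysctl.d/k8s.conf (see the screenshots below):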

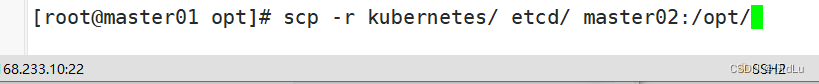

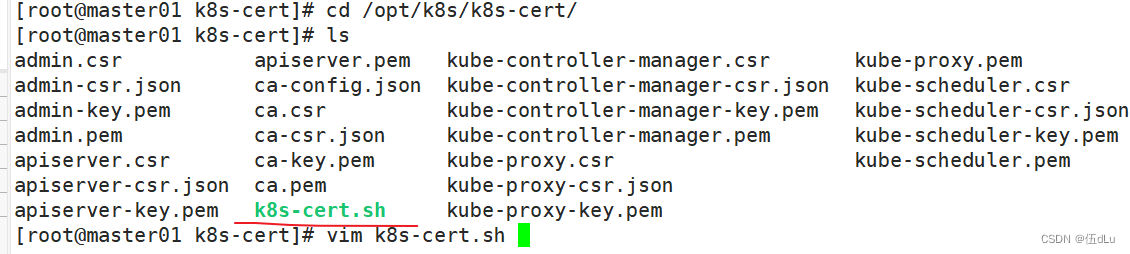
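Below is a hedged sketch of what the two files typically contain. The host entries use the two master IPs that appear in this article. Node entries are left out because their IPs are not given in the text. The kernel parameters are the ones the Kubernetes documentation lists for letting iptables see bridged traffic and for IPv4 forwarding. The function name `write_k8s_host_prereqs` is hypothetical. It takes the target paths as arguments so you can inspect the output before writing to /etc.

```shell
#!/usr/bin/env bash
# Sketch only: append master entries to a hosts file and write common
# Kubernetes kernel parameters to a sysctl drop-in file.
write_k8s_host_prereqs() {
    local hosts_file="$1"    # e.g. /etc/hosts
    local sysctl_file="$2"   # e.g. /etc/sysctl.d/k8s.conf

    # Master IPs from this article; add node entries the same way
    # (node IPs and hostnames are not given in the text).
    cat >> "$hosts_file" <<'EOF'
192.168.233.10 master01
192.168.233.20 master02
EOF

    # Pass bridged traffic through iptables and enable IPv4 forwarding.
    cat > "$sysctl_file" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
}
```

On a real host you would run `write_k8s_host_prereqs /etc/hosts /etc/sysctl.d/k8s.conf`, then `modprobe br_netfilter` (the bridge-nf-call keys only exist once that module is loaded), and finally `sysctl --system` to load the new file.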

Copy the certificates, the master component config files, and the systemd unit files from master01 to master02:

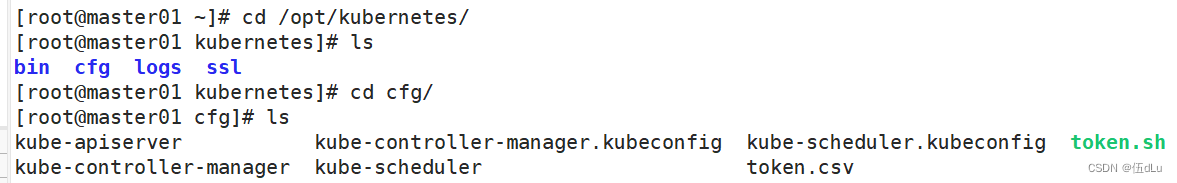

```shell
# Run on master01 from /opt, where kubernetes/ and etcd/ live
scp -r kubernetes/ etcd/ master02:/opt/
```

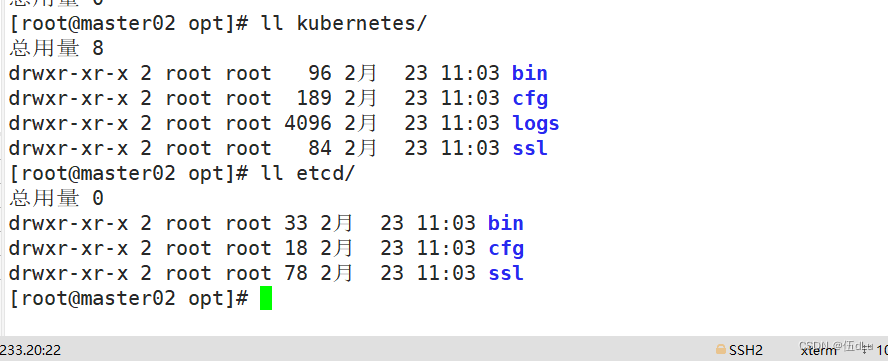

Check master02:

Delete the log files copied over from master01:

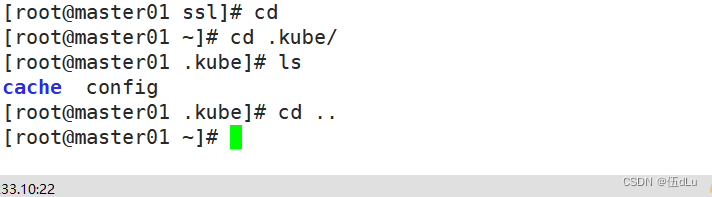

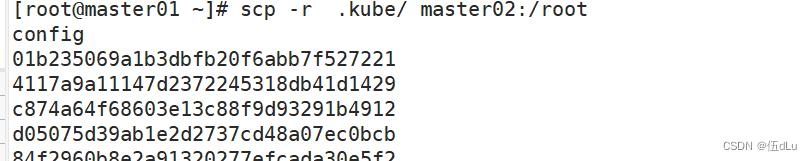

```shell
scp -r .kube/ master02:/root
```

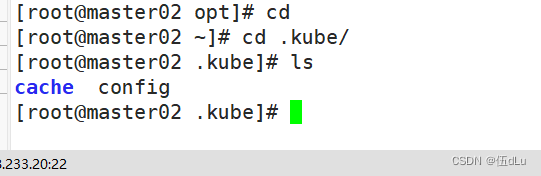

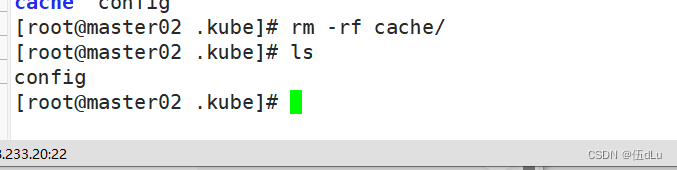

Check it, then delete the directory:

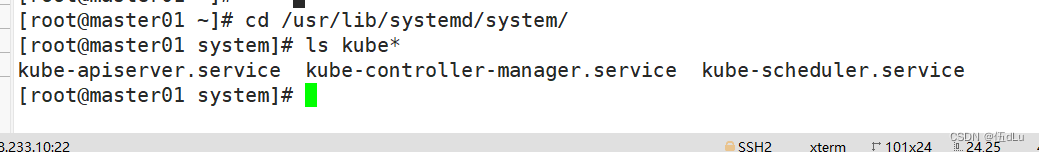

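Copy the systemd unit files:

```shell
cd /usr/lib/systemd/system
ls kube*
# `pwd` expands locally, so the files land in the same directory on master02
scp kube-* master02:`pwd`
```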

Check master02:

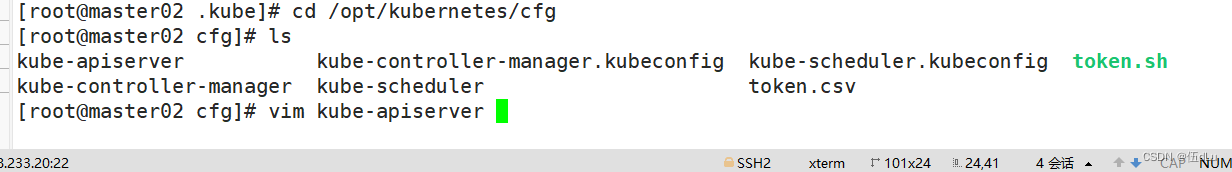

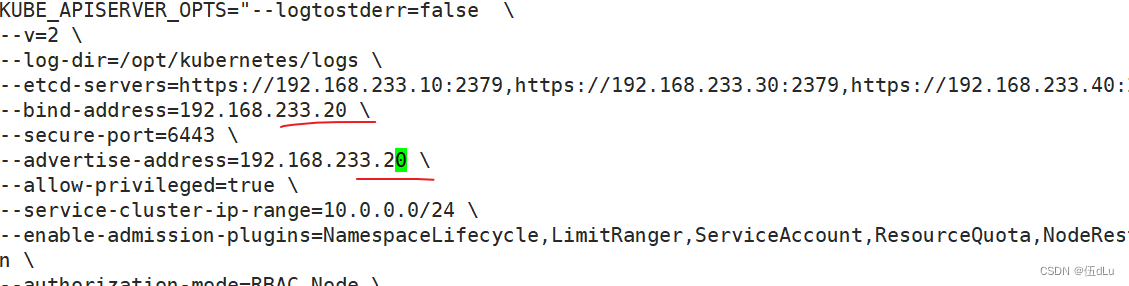

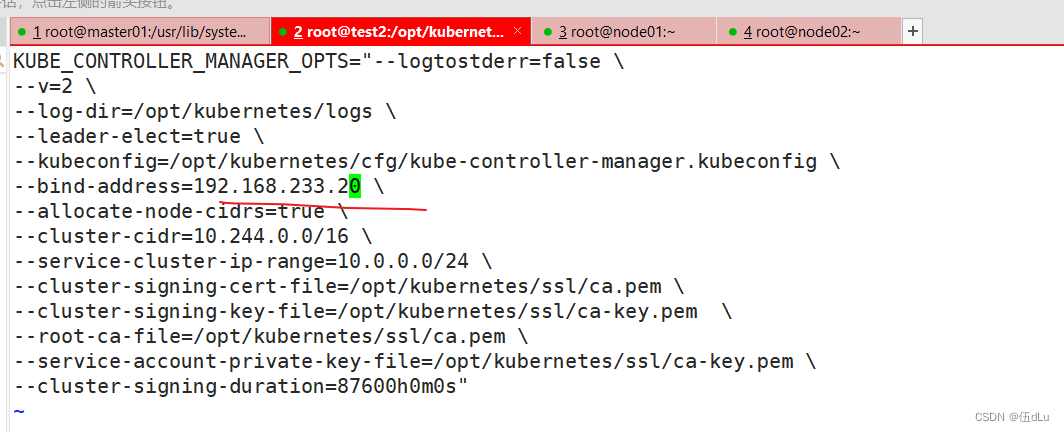

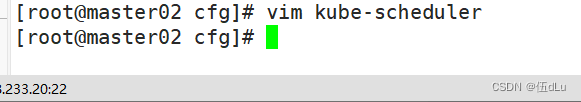

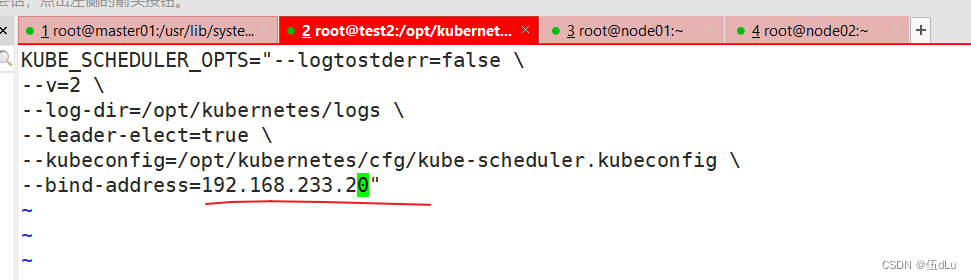

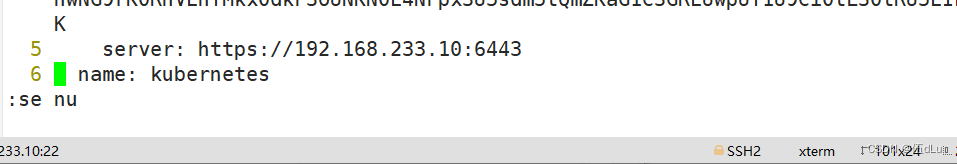

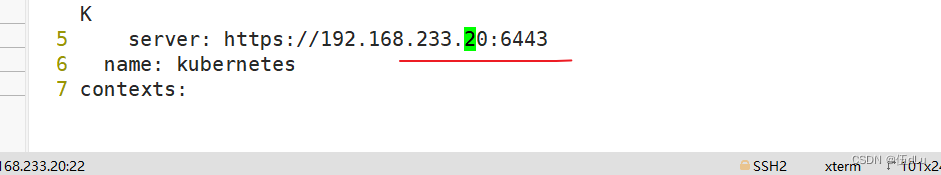

Change the IPs in the kube-apiserver, kube-controller-manager, and kube-scheduler config files on master02:

```shell
cd /opt/kubernetes/cfg
vim kube-apiserver
vim kube-controller-manager
vim kube-scheduler
```
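The kube-apiserver file copied from master01 still carries master01's address. The sketch below assumes that file sets the real kube-apiserver flags `--bind-address=` and `--advertise-address=` to 192.168.233.10. It rewrites only those two flags and leaves other IPs, such as `--etcd-servers`, alone. The helper name `set_apiserver_addr` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite only --bind-address and --advertise-address
# in a kube-apiserver options file. Other flags (e.g. --etcd-servers) keep
# their IPs. \1 keeps the matched flag name and "=".
set_apiserver_addr() {
    local file="$1" ip="$2"
    sed -ri "s/(--(bind|advertise)-address=)[0-9.]+/\1${ip}/g" "$file"
}
```

On master02 this would be `set_apiserver_addr /opt/kubernetes/cfg/kube-apiserver 192.168.233.20`. For the other two files, `grep -n 192.168.233.10 /opt/kubernetes/cfg/kube-*` shows any remaining occurrences to review by hand.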

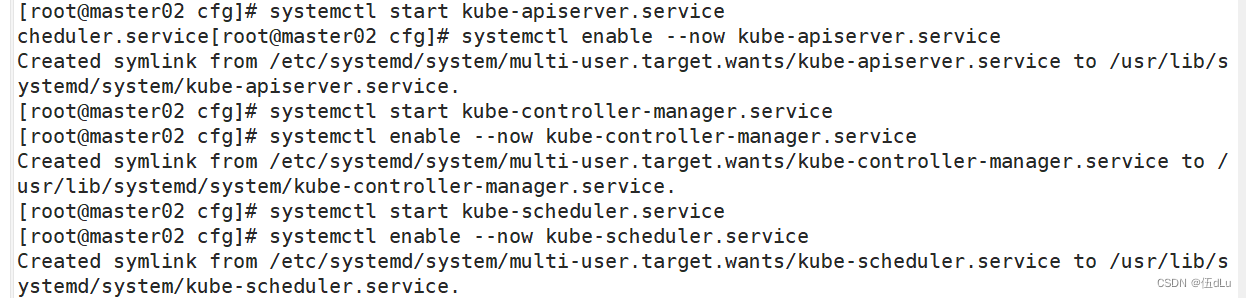

Start the services on master02 and enable them at boot:

```shell
# enable --now both enables each unit at boot and starts it immediately,
# so a separate "systemctl start" is not needed
systemctl enable --now kube-apiserver.service
systemctl enable --now kube-controller-manager.service
systemctl enable --now kube-scheduler.service
```

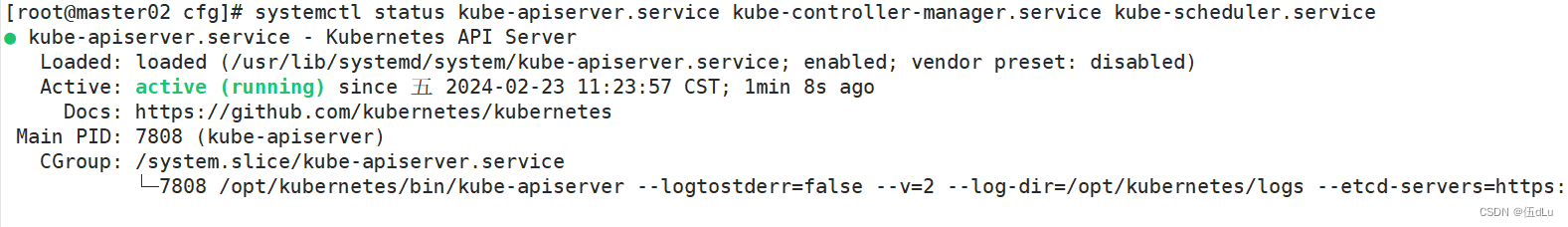

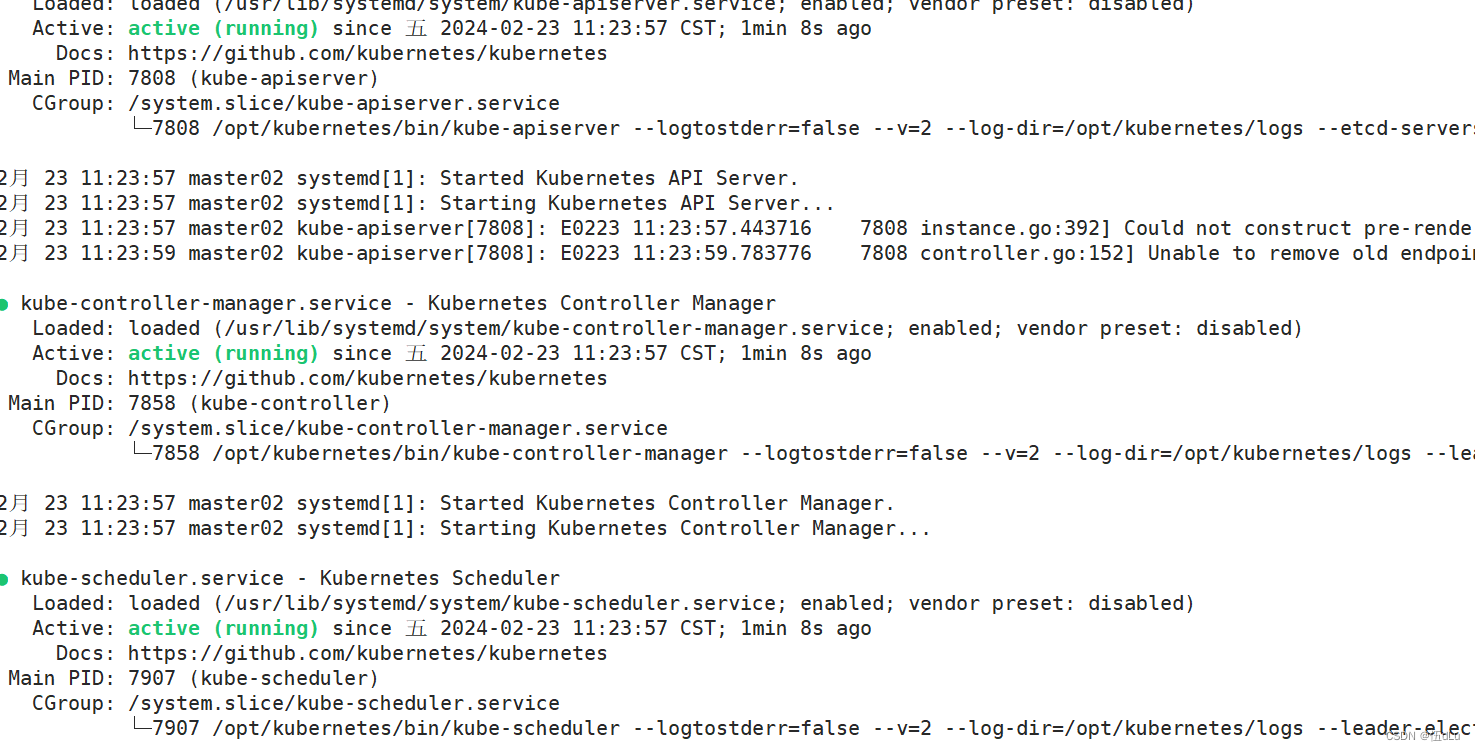

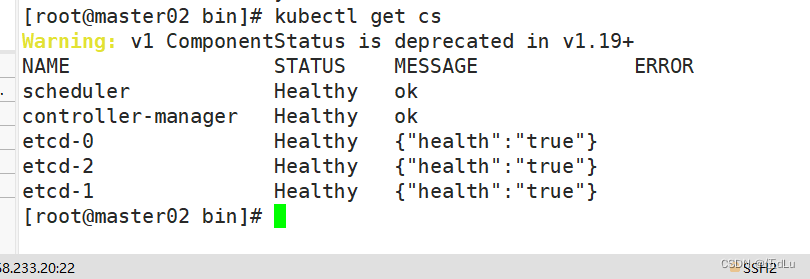

Check their status:

```shell
systemctl status kube-apiserver.service kube-controller-manager.service kube-scheduler.service
```

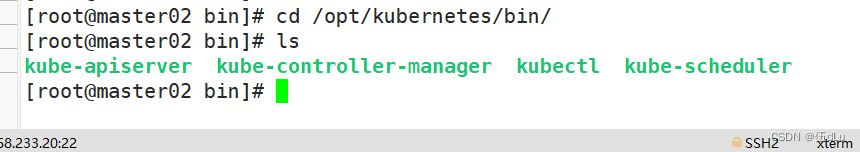

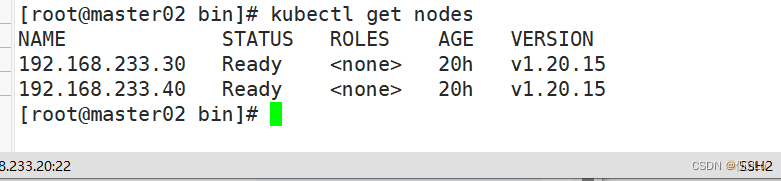

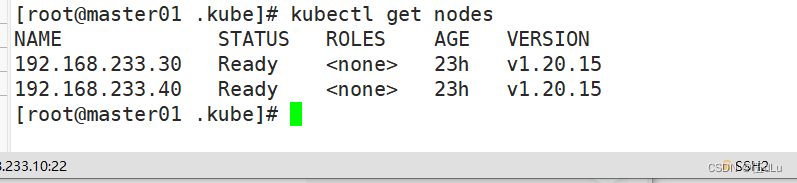

Create symlinks for the binaries, then check the node status:

```shell
cd /opt/kubernetes/bin/
ln -s /opt/kubernetes/bin/* /usr/local/bin/
kubectl get nodes
kubectl get nodes -o wide
```

Since the load balancers and the VIP were defined earlier, simply power on hosts 50 and 60:

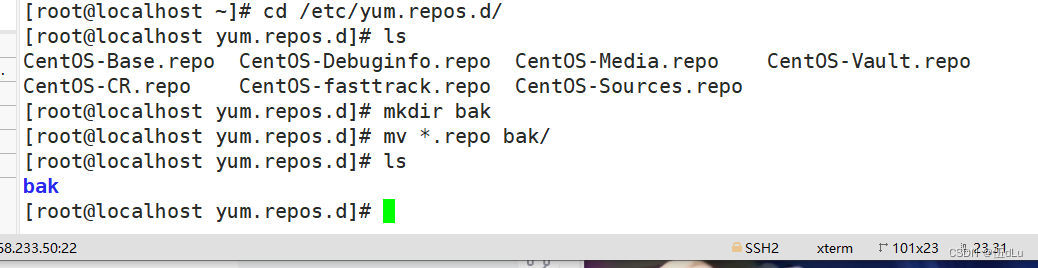

Initialize the load balancers:

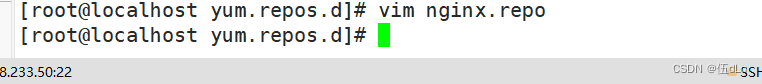

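On both hosts, install nginx from the official nginx.org yum repository, defined in /etc/yum.repos.d/nginx.repo:

```ini
# /etc/yum.repos.d/nginx.repo
[nginx]
name=nginx repo
baseurl=http://nginx.org/packages/centos/$releasever/$basearch/
gpgcheck=1
enabled=1
gpgkey=https://nginx.org/keys/nginx_signing.key
module_hotfixes=true
```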

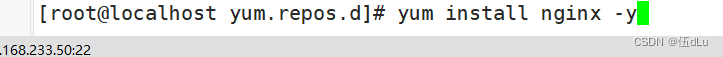

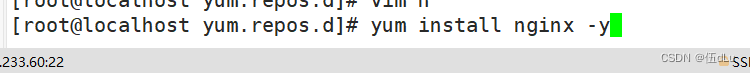

Install nginx:

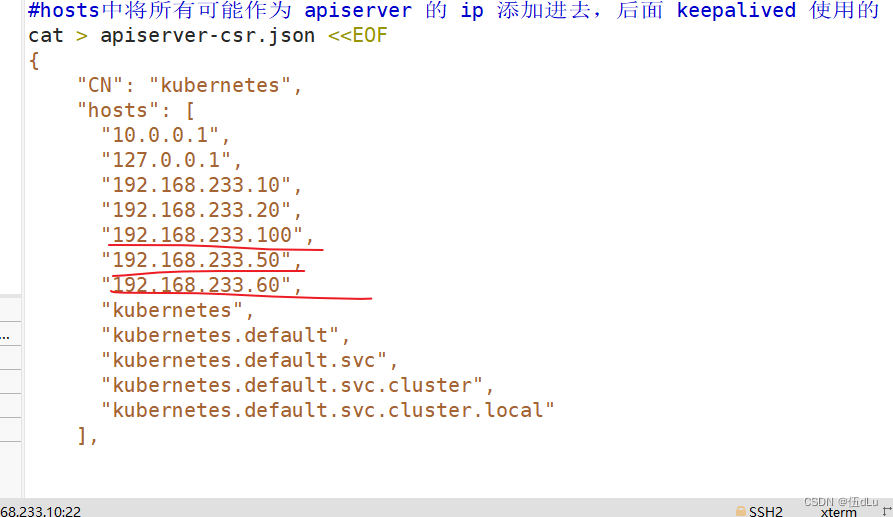

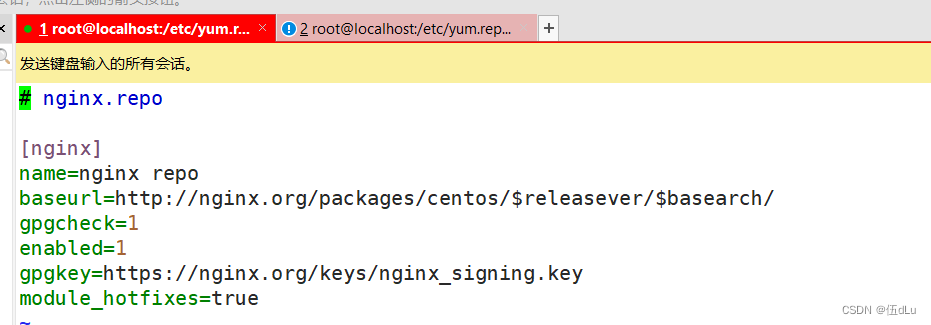

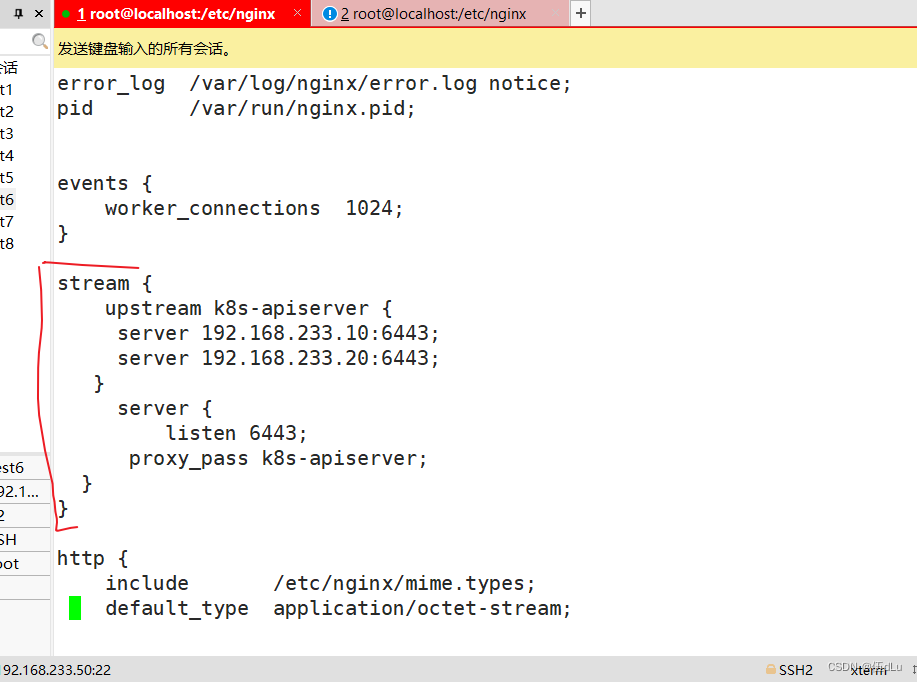

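Edit the nginx config to set up layer-4 (TCP) reverse-proxy load balancing, pointing at the IPs of the cluster's two masters on port 6443.

Add the following to /etc/nginx/nginx.conf. The `stream` block belongs at the top level of the file, not inside the `http` block:

```nginx
stream {
    upstream k8s-apiserver {
        server 192.168.233.10:6443;
        server 192.168.233.20:6443;
    }
    server {
        listen 6443;
        proxy_pass k8s-apiserver;
    }
}
```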

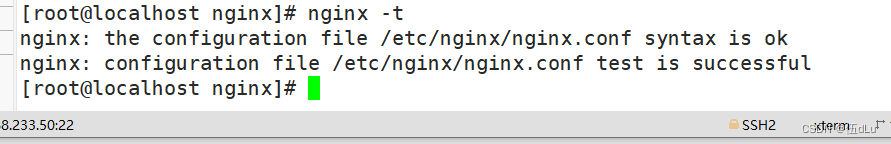
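The article recommends three or more masters. Adding a master only means adding one `server` line to the upstream. The sketch below prints the same `stream` block for any number of master IPs, using port 6443 as above. The function name `render_k8s_stream` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an nginx "stream" block that load-balances
# TCP port 6443 across the kube-apiserver IPs given as arguments.
render_k8s_stream() {
    local ip
    echo 'stream {'
    echo '    upstream k8s-apiserver {'
    for ip in "$@"; do
        echo "        server ${ip}:6443;"
    done
    echo '    }'
    echo '    server {'
    echo '        listen 6443;'
    echo '        proxy_pass k8s-apiserver;'
    echo '    }'
    echo '}'
}
```

Running `render_k8s_stream 192.168.233.10 192.168.233.20` reproduces the block above, and each extra argument adds one upstream server. Listening on 6443 does not clash with kube-apiserver because nginx runs on the separate hosts 50 and 60.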

Check the config syntax:

```shell
nginx -t
```

Start nginx:

```shell
systemctl start nginx
```

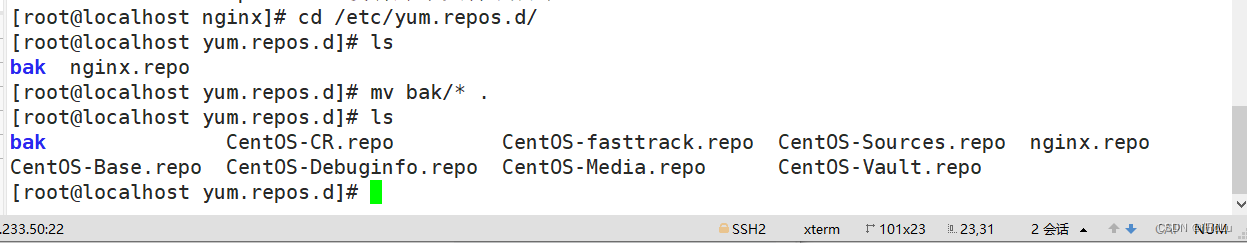

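Deploy the keepalived service:

Move the online repo files back out of bak/ and install keepalived:

```shell
# Run in /etc/yum.repos.d, where the online repo files were moved into bak/
mv bak/* .
yum install keepalived -y
```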

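Add a script that checks whether nginx is alive, saved as /etc/keepalived/check.nginx.sh:

```shell
#!/bin/bash
# killall -0 sends no signal; it only tests whether an nginx process exists.
# If nginx is gone, stop keepalived so the VIP fails over to the backup node.
if ! killall -0 nginx
then
    systemctl stop keepalived
fi
```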

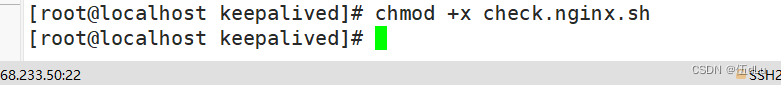

Make the script executable:

```shell
chmod +x check.nginx.sh
```

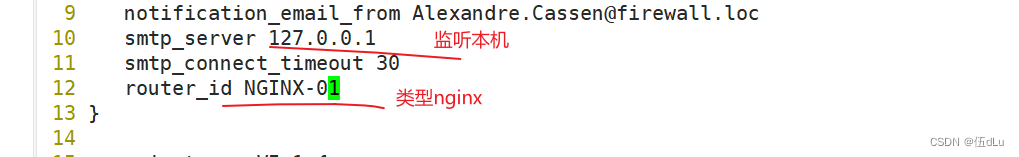

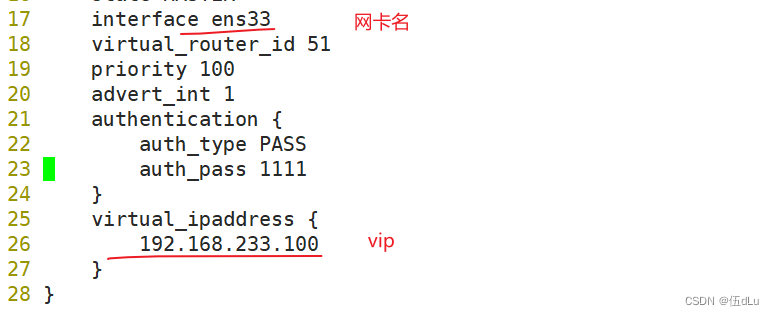

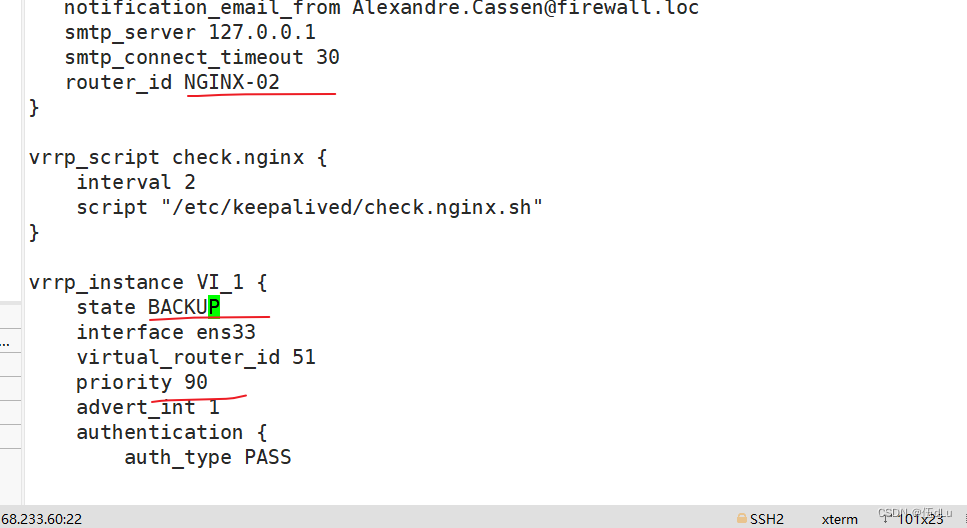

Edit the keepalived config:

```shell
cd /etc/keepalived
vim keepalived.conf
```

Delete everything below this point.

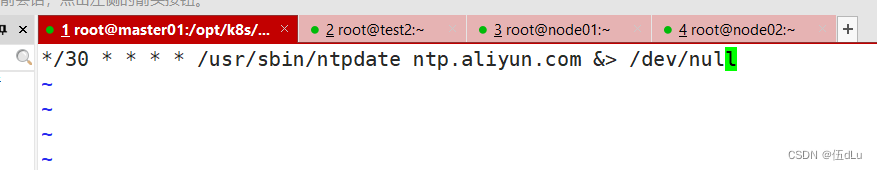

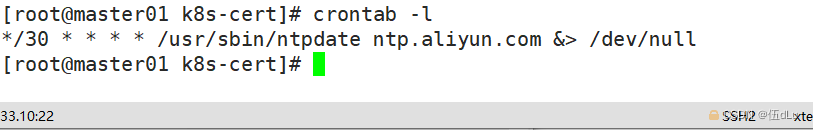

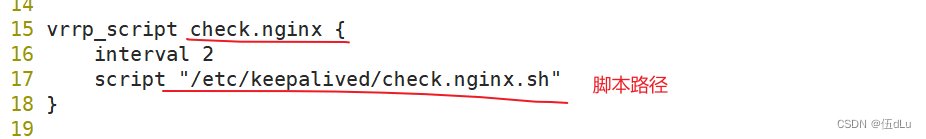

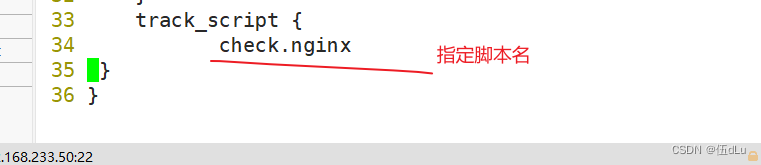

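Then add a script check that runs periodically. The `vrrp_script` block goes at the top level of keepalived.conf, and `track_script` goes inside the `vrrp_instance` block:

```conf
# Run the nginx check every 2 seconds
vrrp_script check.nginx {
    interval 2
    script "/etc/keepalived/check.nginx.sh"
}

# Inside vrrp_instance { ... }:
track_script {
    check.nginx
}
```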

Host 60 is the backup node, so its config needs a few changes:

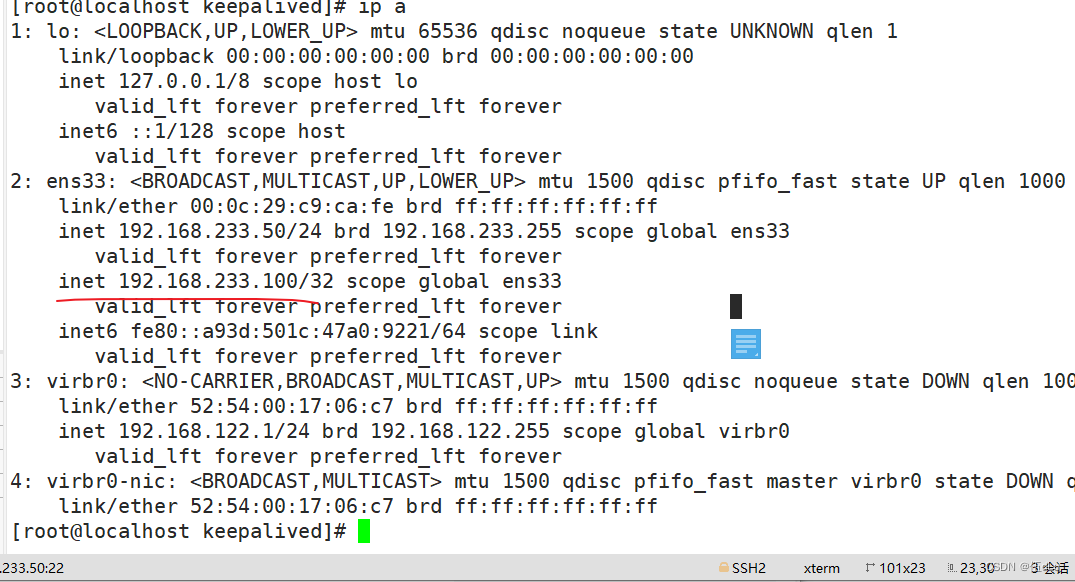

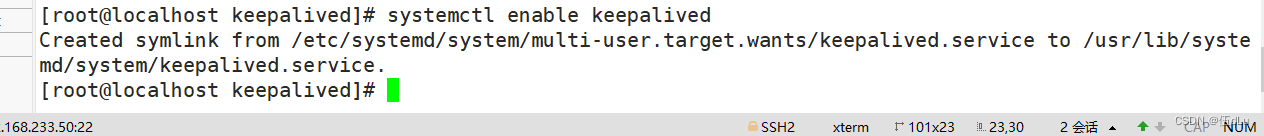
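The sketch below writes a complete keepalived.conf that shows where the snippet above fits and which fields differ between the two nginx hosts. Several values are assumptions because the article does not give them in the text: the interface `ens33`, `virtual_router_id 51`, the VIP `192.168.233.100/24`, and `auth_pass 1111`. Replace them with your own values. The function name `write_keepalived_conf` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a keepalived.conf for one nginx host.
# Assumed defaults (not from the article): interface ens33, VIP
# 192.168.233.100/24, virtual_router_id 51, auth_pass 1111.
write_keepalived_conf() {
    local out="$1" state="$2" priority="$3"
    local iface="${4:-ens33}" vip="${5:-192.168.233.100}"
    cat > "$out" <<EOF
! Configuration File for keepalived
global_defs {
    router_id NGINX_${state}
}

vrrp_script check.nginx {
    script "/etc/keepalived/check.nginx.sh"
    interval 2
}

vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        ${vip}/24
    }
    track_script {
        check.nginx
    }
}
EOF
}
```

Host 50 would use `write_keepalived_conf /etc/keepalived/keepalived.conf MASTER 100`, and host 60 would use `write_keepalived_conf /etc/keepalived/keepalived.conf BACKUP 90`. Only the state, the priority (the backup's must be lower), and the router_id differ. The virtual_router_id and the VIP must match on both hosts.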

Start keepalived:

```shell
systemctl start keepalived
systemctl enable keepalived
```

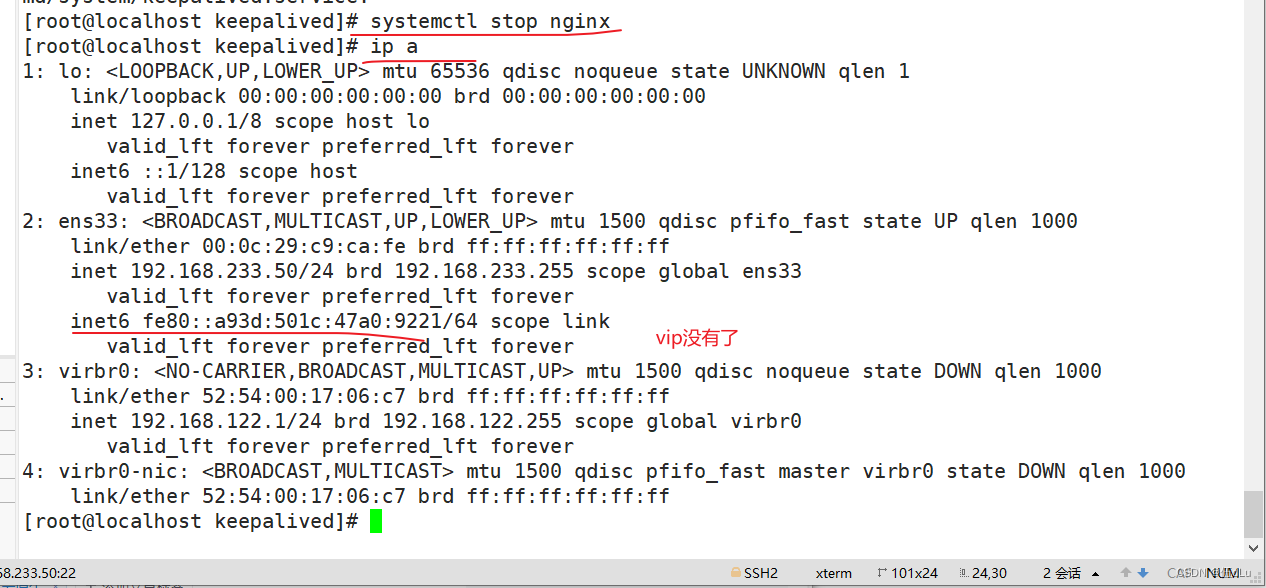

Test: stop nginx on the master node:

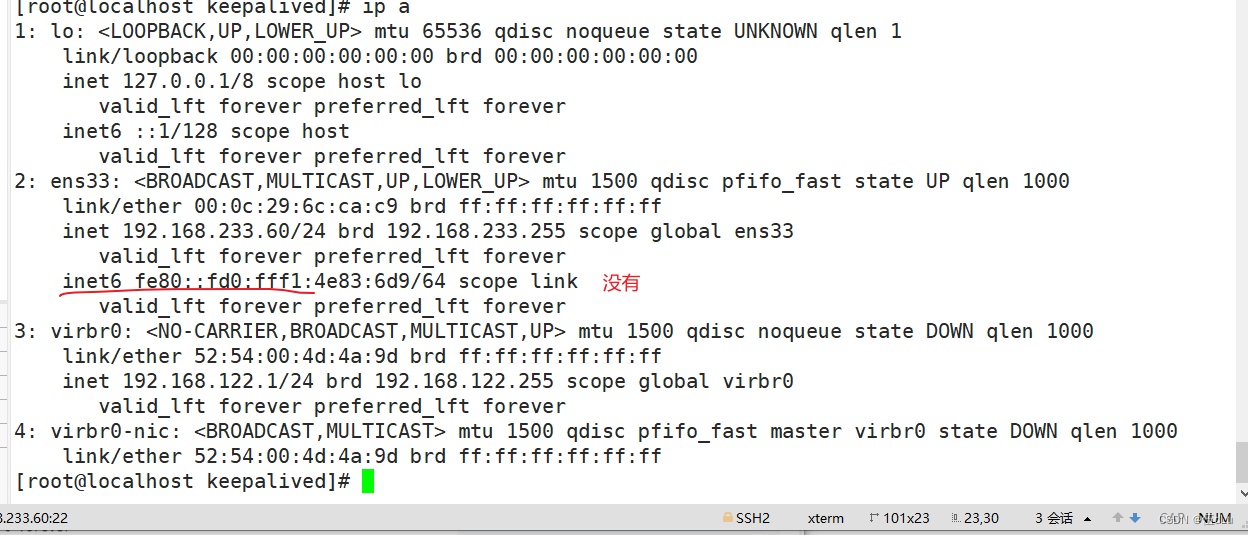

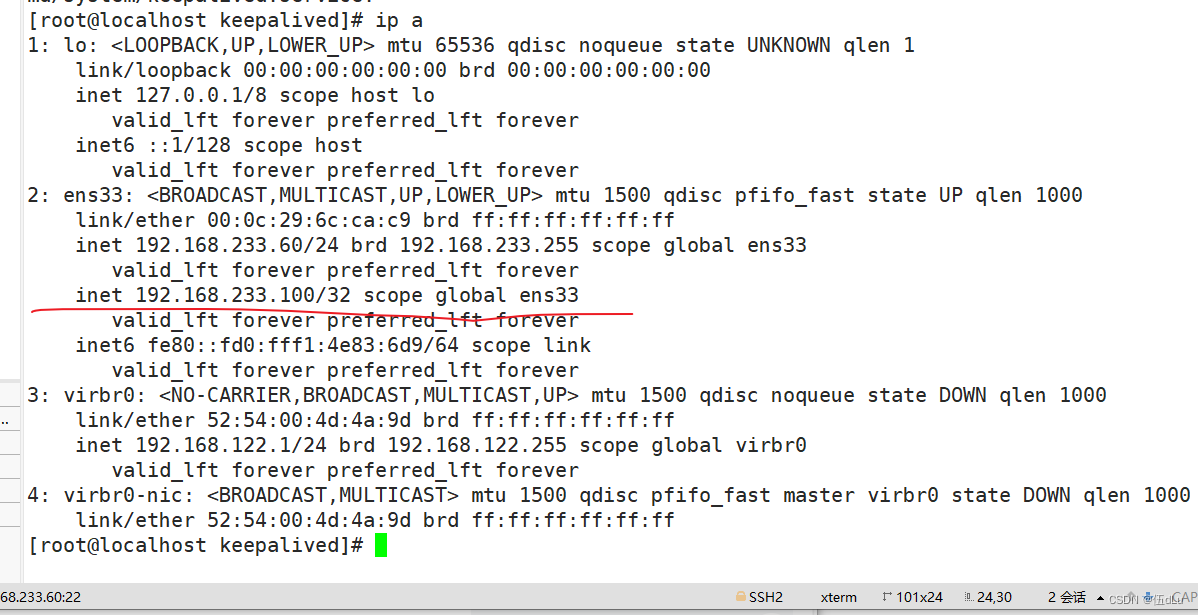

Check backup node 60:

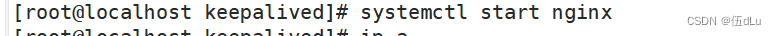

Start nginx on the master node again:

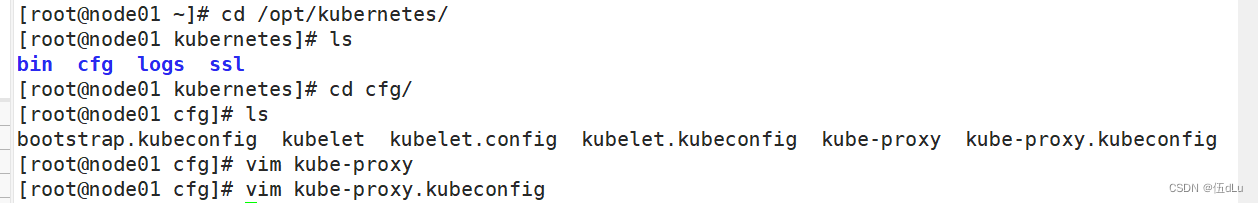

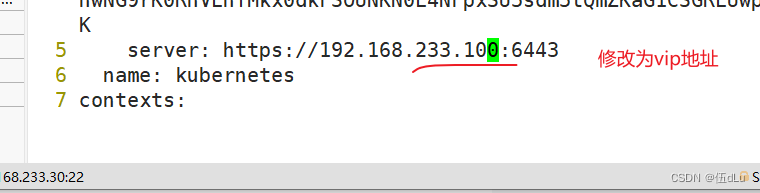

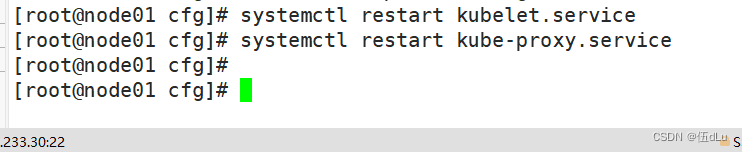

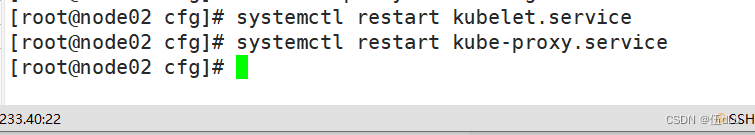
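During the failover test, the deciding question is which host currently holds the VIP. The minimal sketch below answers that by reading the output of `ip -4 -o addr show`. The function name `holds_vip` is hypothetical, and so is the VIP in the usage line, because the article does not state the VIP in the text.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the given VIP appears in `ip -4 -o addr show`
# output read from stdin. -F treats the dots literally, and the trailing "/"
# keeps 192.168.233.10 from matching 192.168.233.100.
holds_vip() {
    grep -qF " inet $1/"
}
```

Run `ip -4 -o addr show | holds_vip 192.168.233.100 && echo "VIP is here"` on hosts 50 and 60 before and after stopping nginx. The VIP should move to host 60 when nginx stops on the master.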

Point bootstrap.kubeconfig, kubelet.kubeconfig, and kube-proxy.kubeconfig on every node at the VIP:

```shell
cd /opt/kubernetes/
vim kube-proxy.kubeconfig
```

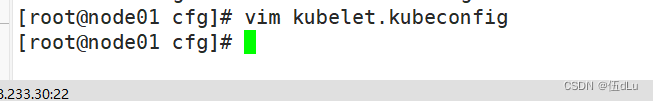

```shell
vim kubelet.kubeconfig
```

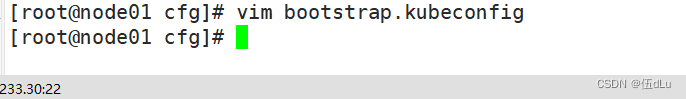

```shell
vim bootstrap.kubeconfig
```
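The same edit can be made on each node without opening the three files in vim. The sketch below assumes each file contains a single `server: https://<ip>:6443` line. It rewrites only the host part and keeps port 6443. The function name `point_kubeconfigs_at_vip` is hypothetical, and so is the VIP in the usage line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point the "server:" URL of the three node kubeconfigs
# at the VIP. \1 keeps "server: https://" and \2 keeps ":6443".
point_kubeconfigs_at_vip() {
    local dir="$1" vip="$2" f
    for f in bootstrap.kubeconfig kubelet.kubeconfig kube-proxy.kubeconfig; do
        sed -ri "s#(server: https://)[^:/]+(:6443)#\1${vip}\2#" "$dir/$f"
    done
}
```

Pass the directory that holds the three files and your VIP, e.g. `point_kubeconfigs_at_vip "$(pwd)" 192.168.233.100`, then confirm the change with `grep server: *.kubeconfig` before restarting the services.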

Restart the kubelet and kube-proxy services:

```shell
systemctl restart kubelet.service
systemctl restart kube-proxy.service
```

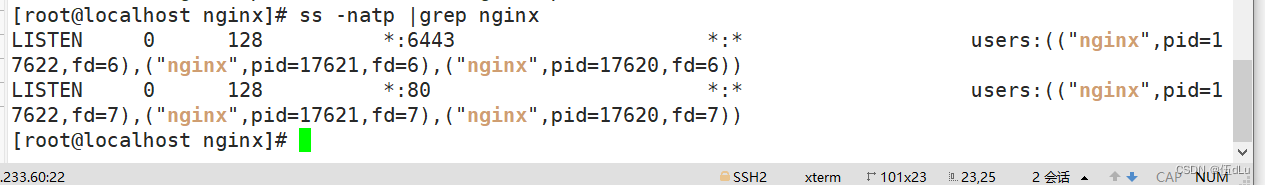

Check nginx's connection status on the load balancer host:

```shell
netstat -natp | grep nginx
```
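To confirm that nginx is spreading traffic across both masters, the netstat output can be summarized per upstream. In `netstat -natp` output, column 5 is the foreign address and column 6 is the state. Connections whose foreign address ends in `:6443` are nginx's own connections to kube-apiserver, while connections from the nodes arrive on nginx's local port 6443. The sketch below counts the first kind. The function name `count_apiserver_conns` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `netstat -natp` output on stdin and count
# ESTABLISHED connections per remote kube-apiserver address (foreign
# address ending in :6443), sorted by address.
count_apiserver_conns() {
    awk '$6 == "ESTABLISHED" && $5 ~ /:6443$/ { n[$5]++ }
         END { for (a in n) print a, n[a] }' | sort
}
```

Use it as `netstat -natp | grep nginx | count_apiserver_conns`. Both 192.168.233.10:6443 and 192.168.233.20:6443 should appear once the nodes have reconnected through the VIP.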

Change the IP in the config file under ~/.kube on master01:

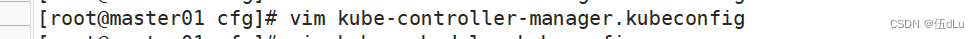

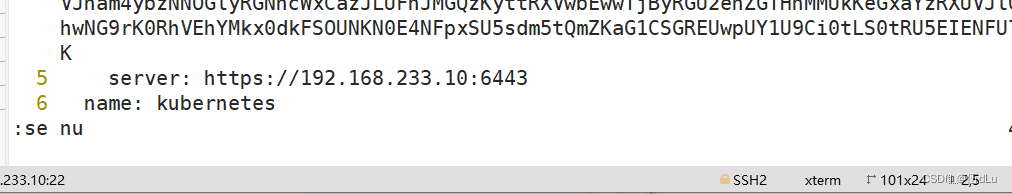

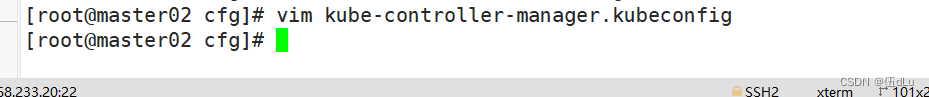

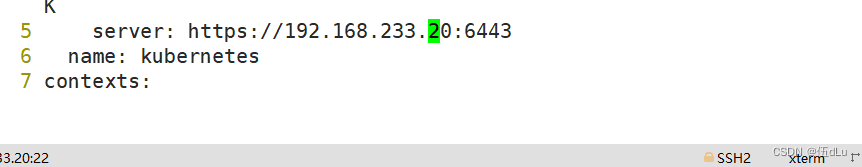

On each master, point the server IP in kube-controller-manager.kubeconfig and kube-scheduler.kubeconfig at that master's own IP:

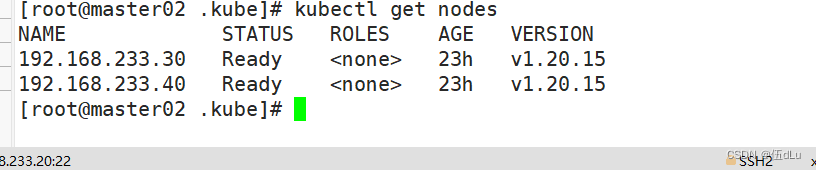

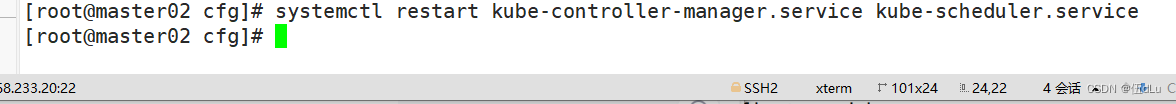

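On master02:

Restart kube-controller-manager.service and kube-scheduler.service. master01's files did not need changing, because they already point at master01's own IP, so nothing needs to be restarted there.

```shell
systemctl restart kube-controller-manager.service kube-scheduler.service
```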